What Tools Are Available for Web Scraping

Web scraping automatically extracts data from websites so that it can be used for purposes such as market research, competitor analysis, and content aggregation. As the demand for data-driven insights grows, web scraping has become an essential technique for data collection and analysis. However, choosing the right web scraping tool can be a formidable task, given the vast array of options. The choice depends on several factors, including the nature of the project, the technical expertise of the user, and the type of data to be extracted.

Web scraping tools range from simple browser extensions to full programming libraries and frameworks. Some are free, while others require a subscription. Free tools such as BeautifulSoup and Scrapy provide a good starting point for beginners, but they lack built-in advanced features such as automatic IP rotation and handling of anti-bot protections. Paid tools such as Octoparse and ParseHub offer these features, along with cloud-based data storage, in exchange for a subscription fee. Whatever tool you choose, be mindful of legal and ethical considerations, test your scraping code before running it on a large scale, and consider the bandwidth you consume on the target site. In this article, we discuss some of the most popular web scraping tools, their features, and their limitations. Whether you are a novice or an expert, there is a tool that can satisfy your requirements.

BeautifulSoup:

BeautifulSoup is a Python library for pulling data out of HTML and XML files. It builds a parse tree from a page, which lets you extract data in a hierarchical and readable way. The library provides simple methods for navigating and searching this tree, and it can cope with malformed HTML, which makes it a robust tool for web scraping.

BeautifulSoup is easy to learn and use, making it a popular choice for beginners. It is also open-source and has a large user community. However, it is only a parser: it does not download pages, manage cookies, or execute JavaScript, so it is usually paired with an HTTP library such as requests.
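The following minimal sketch shows the kind of navigating and searching described above. It parses a small, made-up HTML snippet instead of a downloaded page and assumes each product is an `li` element with class `product`; the markup, class names, and the `extract_products` helper are illustrative, not taken from any real site.

```python
from bs4 import BeautifulSoup

# Hypothetical page content; in a real scraper this string would come from
# an HTTP library such as requests.
HTML = """
<html><body>
  <h1>Product list</h1>
  <ul id="products">
    <li class="product"><a href="/p/1">Widget</a> <span class="price">9.99</span></li>
    <li class="product"><a href="/p/2">Gadget</a> <span class="price">19.50</span></li>
  </ul>
</body></html>
"""


def extract_products(html: str) -> list[dict]:
    """Return the name, link, and price of every product item in the page."""
    # "html.parser" is Python's built-in parser, so no extra dependency is needed.
    soup = BeautifulSoup(html, "html.parser")
    products = []
    # select() takes a CSS selector; find_all("li", class_="product") would also work.
    for item in soup.select("#products li.product"):
        link = item.find("a")
        price_tag = item.find("span", class_="price")
        products.append({
            "name": link.get_text(strip=True),
            "url": link["href"],
            "price": float(price_tag.get_text(strip=True)),
        })
    return products


print(extract_products(HTML))
```

Using `select()` with a CSS selector keeps the extraction rule in one readable string; the same tree can also be walked with `find()` and `find_all()` when the structure is easier to express step by step.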

Scrapy:

Scrapy is an open-source Python framework for extracting data from websites. It provides a complete toolkit for crawling: it sends requests, manages cookies and sessions, follows links, and exports the results. Scrapy does not execute JavaScript on its own, so dynamic pages need an add-on such as scrapy-playwright or Splash. You define how to extract data from each page using XPath or CSS selectors.

Scrapy also provides automatic throttling (through its AutoThrottle extension), automatic retries, and built-in support for other common web scraping tasks. It has a large and active community of users and contributors who support its development.
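Throttling and retries are controlled through project settings. The fragment below is a sketch of a `settings.py` that turns on AutoThrottle and adjusts retries; the setting names are Scrapy's own, but the values are illustrative assumptions rather than recommendations for any particular site.

```python
# settings.py: politeness and robustness options for a Scrapy project.
# The values are illustrative starting points, not tuned for any real site.

# Respect robots.txt rules (projects created with `scrapy startproject` set this to True).
ROBOTSTXT_OBEY = True

# AutoThrottle adjusts the delay between requests based on observed server latency.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5.0         # initial download delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 60.0          # upper bound on the delay when the server is slow
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0  # average parallel requests to aim for per site

# Minimum delay between requests; AutoThrottle never goes below this value.
DOWNLOAD_DELAY = 1.0
# Hard cap on simultaneous requests to a single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 2

# Retry transient failures (the retry middleware is enabled by default).
RETRY_ENABLED = True
RETRY_TIMES = 3  # retries per failed request; Scrapy's default is 2
RETRY_HTTP_CODES = [500, 502, 503, 504, 522, 524, 408, 429]
```

The same keys can also be set for a single spider through its `custom_settings` dictionary.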

Scrapy is a more complex tool than BeautifulSoup and requires some programming knowledge to use effectively. However, once you learn how to use Scrapy, it can be a very powerful tool for web scraping.

Selenium:

Selenium is a browser automation tool that is often used for web scraping. It lets you control a real browser programmatically, so your code can interact with web pages the way a user would, by clicking, typing, and scrolling. That makes it possible to scrape websites that require user interaction, such as logging in or filling out forms, as well as pages that build their content with JavaScript.

Selenium supports multiple programming languages, like Python, Java, and JavaScript. It also supports multiple browsers, including Chrome, Firefox, and Safari.

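Selenium is a potent tool for web scraping, but it can be more complex to set up and use than other tools, and driving a full browser is slower than sending plain HTTP requests. It also requires a driver (such as ChromeDriver or geckodriver) for each browser you want to use; since Selenium 4.6, the bundled Selenium Manager can download a matching driver automatically, which removes much of this setup.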

Octoparse:

Octoparse provides a user-friendly interface for non-programmers to scrape data from websites. Its drag-and-drop interface lets you define visually the data you want to scrape. Octoparse also provides built-in support for common web scraping tasks, such as handling JavaScript and pagination.

Octoparse runs as a desktop application, and on paid plans it can also run scraping tasks in the cloud on Octoparse’s servers, so jobs continue even when your computer is off. It also provides automatic IP rotation, which can help you avoid getting blocked by websites.

Octoparse is a good choice for non-programmers who want to scrape data from websites quickly and easily. However, it is less flexible than code-based tools, and its advanced features require a paid subscription.

ParseHub:

ParseHub provides a visual interface for defining the data you want to scrape: you select elements on a web page and specify how to extract data from them. ParseHub also provides built-in support for common web scraping tasks, such as handling JavaScript and pagination.

ParseHub is a desktop application, but your projects run on ParseHub’s servers, so the scraping itself happens in the cloud rather than on your computer. It also provides automatic IP rotation, which can help you avoid getting blocked by websites.

Like Octoparse, ParseHub is a good choice for non-programmers who want to scrape data from websites quickly and easily. However, it is less flexible than code-based tools, and its free plan is limited, so larger projects require a paid subscription.

WebHarvy:

WebHarvy is a desktop-based web scraping tool that allows you to extract data from websites using a visual interface. It provides built-in support for handling common web scraping tasks, such as handling JavaScript and pagination. WebHarvy also allows you to export data in various formats, including CSV, Excel, and JSON.

WebHarvy is easy to use and does not require any programming knowledge. However, it may not be as powerful as other tools and requires a one-time fee.

OutWit Hub:

OutWit Hub is a browser extension for Chrome and Firefox that allows you to extract data from websites using a visual interface. It provides built-in support for handling common web scraping tasks, such as handling JavaScript and pagination. OutWit Hub also allows you to export data in many formats, including CSV, Excel, and JSON.

OutWit Hub is easy to use and does not require any programming knowledge. However, it may not be as powerful as other tools, and it works only within the browser.

Apify:

Apify is a cloud-based web scraping platform that allows you to extract data from websites using a visual interface or custom scripts. It provides built-in support for handling common web scraping tasks, such as handling JavaScript and pagination. Apify also allows you to export data in various formats, including CSV, Excel, and JSON.

Apify is a powerful tool for web scraping, but it can be more complex than other tools. Beyond its free tier, it requires a subscription, and costs can become high for larger projects.

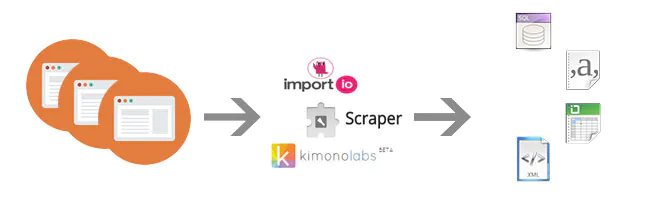

Import.io:

Import.io is a web-based platform that allows you to extract data from websites using a visual interface or custom scripts. It provides built-in support for handling common web scraping tasks, such as handling JavaScript and pagination. Import.io also allows you to export data in different formats, including CSV, Excel, and JSON.

Import.io is a powerful tool for web scraping, but it can be more complex than other tools. It also requires a subscription, and costs can become high for larger projects.

Some Key Considerations

When using web scraping tools, keep several considerations in mind to make sure you are using them effectively and legally. Here are a few key ones:

Follow website terms of service: Before scraping any website, review the website’s terms of service to ensure that you don’t violate any rules or agreements. Some websites prohibit web scraping, while others may require you to obtain permission or limit the amount of data you can extract.
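Terms of service have to be read by a person, but many sites also publish a machine-readable robots.txt file that states which paths automated clients should avoid. Checking it is a cheap first step alongside reading the terms. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content and the user-agent name are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration. For a real site you would call
# parser.set_url("https://example.com/robots.txt") and parser.read() instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-research-bot"  # hypothetical user-agent string

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch() reports whether the rules allow this user agent to request a URL.
print(parser.can_fetch(USER_AGENT, "https://example.com/products"))   # True
print(parser.can_fetch(USER_AGENT, "https://example.com/private/a"))  # False
# crawl_delay() returns the Crawl-delay (in seconds) that applies, or None.
print(parser.crawl_delay(USER_AGENT))  # 5
```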

Respect website bandwidth: Web scraping can put significant strain on a website’s servers, so keep the load you generate in check. Avoid sending large bursts of requests at once, and consider using tools that let you set crawl rate limits.
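If a tool does not offer crawl rate limits, a simple limiter can be added to your own code. The sketch below, a hypothetical `RateLimiter` class built only on the standard library, enforces a minimum interval between consecutive requests. It assumes a single-threaded scraper, and the interval you pick is up to you.

```python
import time


class RateLimiter:
    """Allow at most one request per `min_interval` seconds (single-threaded use)."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last = None  # monotonic timestamp of the previous request, if any

    def wait(self) -> None:
        """Sleep just long enough that `min_interval` has passed since the last call."""
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


# Usage sketch: one request every 2 seconds at most.
limiter = RateLimiter(min_interval=2.0)
```

Calling `limiter.wait()` immediately before each request to the same site keeps the request rate at or below one per `min_interval` seconds. Using `time.monotonic()` rather than wall-clock time keeps the spacing correct even if the system clock is adjusted.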

Use proxies: Using proxies can help you avoid IP blocking or other issues that may arise when scraping websites. Proxies allow you to make requests from different IP addresses, making it harder for websites to detect and block your scraping activity.
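In Python, rotating through a pool of proxies can be sketched with the standard library's `urllib.request.ProxyHandler`, picking the next proxy in round-robin order for each request. The proxy addresses below are placeholders, and round-robin is only one possible rotation strategy.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; substitute the addresses from your proxy provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]
_proxy_cycle = itertools.cycle(PROXIES)


def next_opener() -> urllib.request.OpenerDirector:
    """Build a URL opener that sends HTTP and HTTPS traffic through the next proxy."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Fetch `url`, using a different proxy from the pool on each call."""
    with next_opener().open(url, timeout=timeout) as resp:
        return resp.read()
```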

Test your scraping code: Before running your scraping code on a large scale, test it on a small sample of data to make sure it works as expected. This helps you catch errors before they become bigger problems.
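One way to do this in Python is to save a small sample page and write unit tests for your parsing function against it, so the extraction logic can be checked without touching the live site. The sketch below uses `unittest` and BeautifulSoup; the `parse_prices` function and the sample markup are hypothetical.

```python
import unittest

from bs4 import BeautifulSoup


def parse_prices(html: str) -> dict[str, float]:
    """Hypothetical parser under test: map each product name to its price."""
    soup = BeautifulSoup(html, "html.parser")
    prices = {}
    for row in soup.select("tr.product"):
        name = row.select_one("td.name").get_text(strip=True)
        # Strip the currency symbol before converting to a number.
        price = row.select_one("td.price").get_text(strip=True).lstrip("$")
        prices[name] = float(price)
    return prices


# A small saved sample of the target page (made up here).
SAMPLE_HTML = """
<table>
  <tr class="product"><td class="name">Widget</td><td class="price">$9.99</td></tr>
  <tr class="product"><td class="name">Gadget</td><td class="price">$19.50</td></tr>
</table>
"""


class ParsePricesTest(unittest.TestCase):
    def test_extracts_all_rows(self):
        self.assertEqual(parse_prices(SAMPLE_HTML), {"Widget": 9.99, "Gadget": 19.5})

    def test_page_without_products_gives_empty_result(self):
        self.assertEqual(parse_prices("<html></html>"), {})
```

Run it with `python -m unittest` followed by the file name. If the site later changes its markup, updating the saved sample and re-running the tests shows quickly which selectors broke.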

Be mindful of legal considerations: Web scraping can be a legal grey area, and it’s important to be aware of any legal considerations or regulations that may apply to your project. For example, some websites may have copyright or data privacy restrictions that limit the types of data you can extract.

By keeping these points in mind, you can use web scraping tools effectively and ethically to extract valuable data for your project.

Conclusion:

To sum up, web scraping is a powerful technique for extracting data from websites. Many tools are available for it, ranging from simple browser extensions to complex programming libraries. The choice of tool depends on your requirements, programming knowledge, and budget. If you are a beginner or a non-programmer, visual tools like Octoparse, ParseHub, and WebHarvy may be the best option. If you are an experienced programmer, tools like BeautifulSoup, Scrapy, and Selenium may be more suitable. Selecting the right tool for your project is essential to extracting the data you need efficiently and effectively.