How Can I Extract Data From A Website

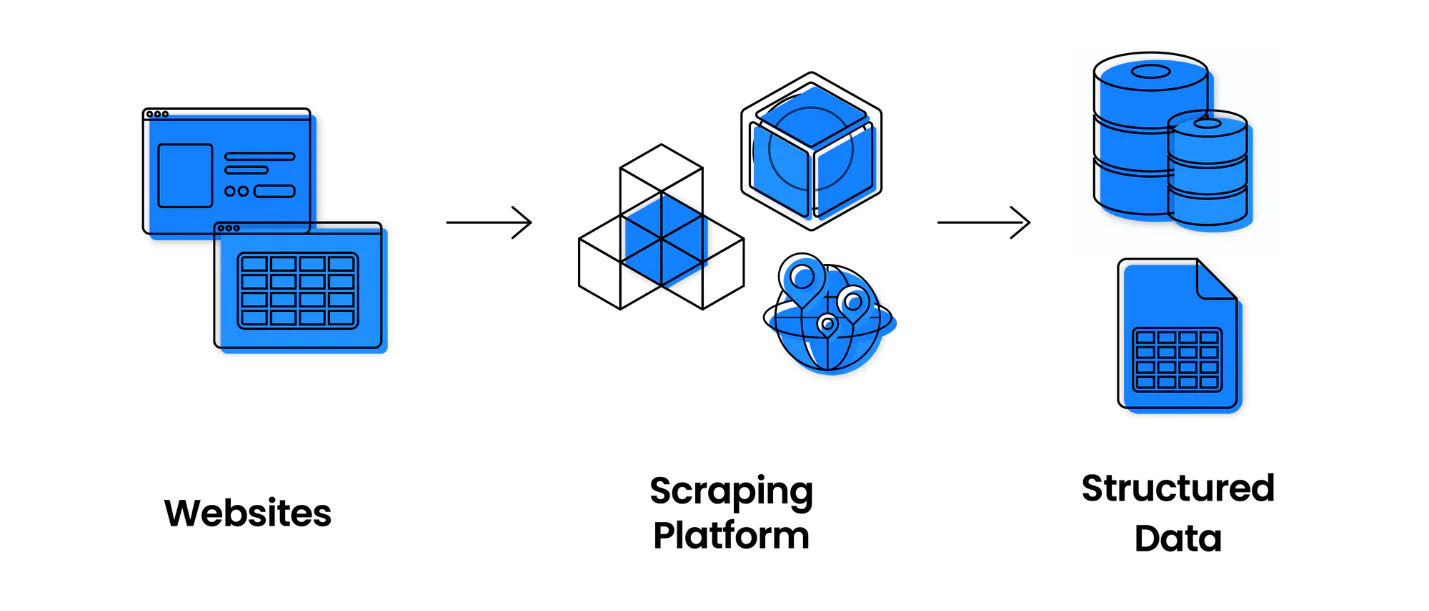

In today’s digital era, the vast amount of information available online has made data extraction from websites a valuable skill for individuals and corporations alike. Web scraping, also known as website data extraction, involves the process of collecting and extracting relevant data from web pages, such as customer reviews, product details, contact information, and more. This process utilizes tools and techniques to send requests to website servers, retrieve data from the responses, and analyze and parse it to extract the desired information. While web scraping can be performed manually, software tools like web scrapers or web crawlers enhance efficiency. The extracted data can be leveraged for market research, price comparison, lead generation, and content creation, making web scraping an indispensable tool in the era of big data. However, practicing web scraping ethically and ensuring compliance with legal regulations is crucial.

Why Do You Need Web Scraping?

Web scraping is now a crucial tool for numerous organizations and industries. Here are some of the reasons why web scraping is important:

Business Intelligence: Companies can use web scraping to gather data about their competitors, market trends, and consumer behavior. They can use this information to make wise choices on creating new products, pricing schemes, and marketing initiatives.

Lead Generation: Web scraping can help businesses generate leads by extracting website contact information. This information can then be used for sales and marketing purposes.

Research: Web scraping can be used for research purposes, such as analyzing social media trends or monitoring news articles. Researchers can use this data to identify patterns and draw insights that can be used for academic purposes or to inform policy decisions.

Content Aggregation: Web scraping can aggregate content from multiple sources and create a centralized database. That can be useful for news websites, online marketplaces, and other platforms that require a large amount of data.

Price Monitoring: Retailers can use web scraping to monitor prices on competitor websites and adjust their pricing accordingly. That can help them stay competitive in the market and attract more customers.

Data Analysis: Web scraping can collect data for data analysis and machine learning models. That can help businesses make predictions about consumer behavior, identify outliers, and detect patterns in large datasets.

Is Web Scraping Legal?

Web scraping can be controversial, as it involves collecting data from websites without the website owner’s consent. The legality of web scraping is influenced by several variables, including the reason for the scraping, the type of data being scraped, and the website’s terms of service. In general, web scraping is legal if done ethically and in compliance with legal regulations. Web scraping may be against the law if it infringes on someone else’s copyright, intellectual property rights, or privacy rights. Before engaging in web scraping, it is crucial to seek legal advice.

Web Scraping Tools and Techniques

To perform web scraping effectively, one can go for some efficient tools and techniques as listed below:

Beautiful Soup:

Beautiful Soup is a Python library widely used for web scraping. It provides an easy-to-use interface for parsing HTML and XML documents. Beautiful Soup allows you to extract specific data from web pages by navigating the parse tree and searching for elements based on tags, attributes, or text. It handles common web scraping challenges like malformed HTML and encoding issues.

Selenium:

Selenium is an effective tool for web scraping that provides a browser automation framework. It allows you to control web browsers programmatically and interact with web elements dynamically. Selenium is particularly useful for scraping websites that heavily rely on JavaScript for rendering content. It can replicate user actions, such as clicking buttons and filling out forms, to scrape data from dynamically generated pages.

Scrapy:

Scrapy is a comprehensive web scraping framework for Python. It exhibits a complete set of tools for building web scrapers in a structured and scalable manner. Scrapy handles the entire scraping process, including making HTTP requests, parsing HTML/XML, following links, and storing the scraped data. It also supports features like handling cookies, sessions, and user authentication.

Puppeteer:

Puppeteer is a Node.js library that provides a high-level API for controlling the Chrome or Chromium browser. It allows you to automate tasks in the browser environment, including web scraping. Puppeteer provides a rich set of features for interacting with web pages, such as generating screenshots, generating PDFs, and handling navigation events. It is particularly useful for scraping websites that heavily rely on client-side rendering.

Proxies and CAPTCHA solving:

Handling potential challenges like IP blocking and CAPTCHA verification is essential when performing web scraping at scale. Proxy servers can be used to rotate IP addresses and prevent getting blocked by websites. CAPTCHA solving services, such as AntiCaptcha and 2captcha, can help automate the process of solving CAPTCHA challenges encountered during scraping.

API-based scraping:

Some websites offer APIs (Application Programming Interfaces) that allow developers to access their data in a structured and authorized manner. Instead of scraping web pages directly, you can interact with the API endpoints to retrieve the desired data. API-based scraping is usually faster, more reliable, and legally compliant than traditional web scraping techniques.

Fundamental Steps for Web Scraping

Considering the tools, techniques, and best practices discussed earlier, let’s go through a step-by-step process for extracting data from a website.

Step 1: Identify the target website:

The first step in scraping is identifying the website from which you want to scrape data. Take the following factors into consideration:

Study website structure: Understand how the website is organized, including URLs and HTML elements.

Determine data requirements: Identify the specific information you need, such as text, images, or links.

Check terms of service: Review website guidelines to ensure web scraping is allowed and comply with any restrictions.

Handle authentication: If required, consider how to manage authentication or login credentials for accessing data.

Step 2: Choose the appropriate tool:

Select a web scraping tool based on your requirements. If the website heavily relies on JavaScript, consider using tools like Selenium or Puppeteer. For simpler HTML parsing, Beautiful Soup or Scrapy may be more suitable.

Step 3: Inspect the website:

This step involves using your browser’s developer tools to inspect the HTML structure of the web page you want to scrape. Identify the relevant elements (tags, classes, or IDs) containing the needed data. This information will be used in the scraping process.

Step 4: Set up the environment:

Install the chosen web scraping tool, along with any necessary dependencies. For example, if you’re using Beautiful Soup or Scrapy, make sure you have Python installed. If you’re using Puppeteer, ensure you have Node.js installed.

Step 5: Write the scraping code:

Depending on your selected tool, write the necessary code to initiate the scraping process. Use the tool’s documentation and examples to guide you. Here is a basic example using Beautiful Soup in Python:

Python

import requests from bs4 import BeautifulSoup # Send a GET request to the webpage response = requests.get(“https://example.com”) # Create a BeautifulSoup object to parse the HTML content soup = BeautifulSoup(response.text, “html.parser”) # Extract the desired data using the BeautifulSoup methods title = soup.title.text paragraphs = soup.find_all(“p”) # Process or store the extracted data as needed print(“Title:”, title) for p in paragraphs: print(“Paragraph:”, p.text)

Step 6: Handle dynamic content (if necessary):

If the website relies heavily on JavaScript to render content, you may need to use tools like Selenium or Puppeteer to interact with the web page and extract the dynamic content. That involves simulating user actions like clicking buttons, scrolling, or filling out forms.

Step 7: Implement data storage and processing:

Decide how you want to store the scraped data. You can write it to a file (e.g., CSV, JSON), store it in a database, or process it directly within your code. Ensure you handle the data securely and comply with applicable data privacy regulations.

Step 8: Handle challenges and edge cases:

Web scraping may encounter challenges like IP blocking, CAPTCHA verification, or rate limiting. To overcome these, consider using proxies to rotate IP addresses, employing CAPTCHA-solving services, or implementing strategies to handle rate limits imposed by the website.

Step 9: Respect website policies and legal considerations:

Before scraping a website, review its terms of service and ensure that your scraping activities comply with legal and ethical standards. Respect website policies regarding crawling frequency, content usage, and user privacy.

Step 10: Test and monitor your scraping process:

Regularly test your scraping code and monitor its performance. Be prepared to make adjustments if the website’s structure or behavior changes.

Conclusion

Web scraping as a website data collector and analyzer has evolved into a vital tool for numerous enterprises and industries. Still, it must be carried out morally and per legal regulations. Various tools and techniques are available for web scraping, ranging from simple manual methods to sophisticated software tools. To avoid legal and ethical issues, it is important to follow best practices, such as respecting website terms of service, using ethical web scraping techniques, using reliable web scraping tools, and monitoring web scraping activities. With these best practices in mind, web scraping can be a valuable and effective data collection and analysis tool.