How To Use Data Mining For Text Analysis And Sentiment Analysis

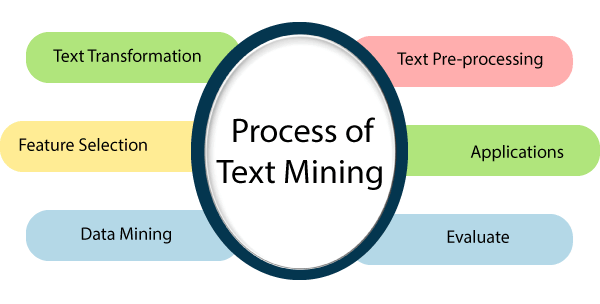

Data mining is an influential aspect of tasks like the extraction of meaningful patterns, trends and insights through extensive raw data. When data mining is applied to texts, it can alter the unstructured information into structured on to help organizations, individual researchers and analysts in decision-making. A valid analysis of text data gathered from sources such as social media sites, news articles or customer feedback can provide you with an abundance of useful information. Text and sentiment analysis are the most common areas in which data mining is applied. The process of text analysis is about getting an understanding of text content by simplifying it into structured formats and determining the essential keywords or topics. Correspondingly, a sentiment analysis goes deeper to explore the emotional tone behind the given text; it can detect all kinds of tones, including positive, negative, or even neutral. The application of data mining in text and sentiment analysis helps better capture trending trends, public opinions, and other essential insights from a textual data form.

Data Mining For Text Analysis

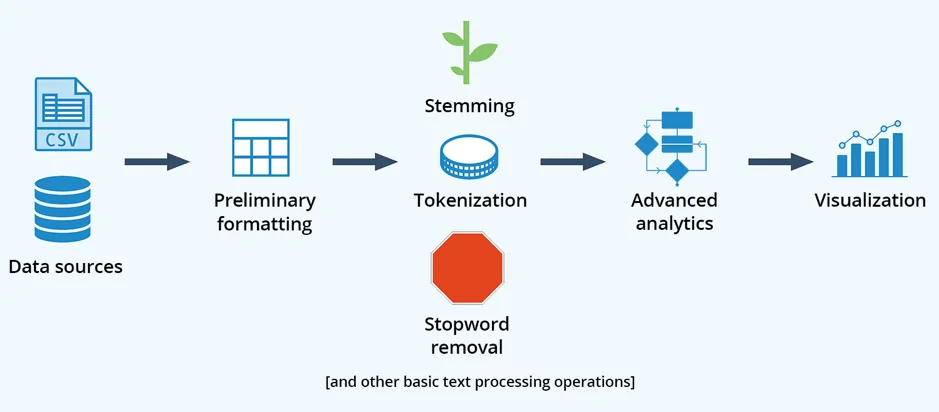

Data mining is used in the process of text analysis, transforming unstructured data into structured and meaningful information.text analysis is vital for fields like social media analysis, marketing and business intelligence, which require effective analysis of large text data like emails, customer reviews, and comments. The detailed steps of text analysis via data mining are as follows.

Step 1: Text Processing

Before analysis, the text is to be cleaned from any noises, including punctuations, stop words or any special characters. All these things are of no role as meaningful information. The following phases can clean text from these unnecessary things.

The first phase is tokenization, which is about simplifying the given text in the form of words or tokens. The code provided below can help break complex sentences into small units or words and unify them while converting them into lowercase.

from nltk.tokenize import word_tokenize

# Sample text

text = “Data mining is essential for text and sentiment analysis!”

# Tokenize the text

tokens = word_tokenize(text.lower())

print(tokens)

After tokenization, the next phase is to remove punctuation and stopwords. Both of these carry no specific significance in text analysis. The code that can be used here is:

from nltk.corpus import stopwords

import string

# Load stopwords

stop_words = set(stopwords.words(‘english’))

# Remove stopwords and punctuation from tokens

filtered_tokens = [word for word in tokens if word not in stop_words and word not in string.punctuation]

print(filtered_tokens)

The third phase of text processing is about stemming and lemmatization, which is used for word reduction into root form and ensuring linguistic correctness. The following code illustrates the lemmatization process.

from nltk.stem import WordNetLemmatizer

# Initialize the lemmatizer

lemmatizer = WordNetLemmatizer()

# Lemmatize tokens

lemmatized_tokens = [lemmatizer.lemmatize(word) for word in filtered_tokens]

print(lemmatized_tokens)

Step 2: Feature Extraction

After you have processed the text, the next step is to convert it into an organized format to make it analyzeable in data mining algorithms. The feature extraction step changes text information into numerical features that contain the meaning of the text.

The BoW (Bag of Words) mode is one of the best approaches for feature extraction, which can depict text as a pack of words and their frequencies, overlooking linguistic use and word order. In this model, each text is denoted as a vector, where each position conforms to a word within the vocabulary. Here’s the code to make a BoW model with Python:

from sklearn.feature_extraction.text import CountVectorizer

# Sample documents

docs = [“Data mining is essential.”, “Text analysis requires preprocessing.”, “Sentiment analysis is important.”]

# Create a CountVectorizer instance

vectorizer = CountVectorizer()

# Fit and transform the documents into a bag-of-words representation

X = vectorizer.fit_transform(docs)

# Display the bag-of-words model as an array

print(X.toarray())

Though the Pack of Words model counts word occurrences, it doesn’t account for the significance of words over multiple documents. On the other hand, TF-IDF could be a more progressed strategy that allows weight to words according to their recurrence in a document based on how frequently they show up over the whole dataset. The code snippet to create the TF-IDF model is :

from sklearn.feature_extraction.text import TfidfVectorizer

# Create the TF-IDF vectorizer

tfidf_vectorizer = TfidfVectorizer()

# Fit and transform the documents into a TF-IDF matrix

tfidf_matrix = tfidf_vectorizer.fit_transform(docs)

# Display the TF-IDF matrix as an array

print(tfidf_matrix.toarray())

Step 3: Text Clustering

Clustering is an autonomous learning technique utilized to make groups of relative documents together. For text analysis, clustering is valuable for finding patterns or themes over expansive datasets, like in customer reviews or social media comments.

The most famous clustering algorithm is K-means, which segments the information into k clusters, in which each document is allocated to the cluster whose centroid is adjacent to it. The following code is used to cluster text with K-means:

from sklearn.cluster import KMeans

# Define the number of clusters

k = 2

# Apply K-Means clustering

kmeans = KMeans(n_clusters=k)

kmeans.fit(tfidf_matrix)

# Print the cluster assignments

print(kmeans.labels_)

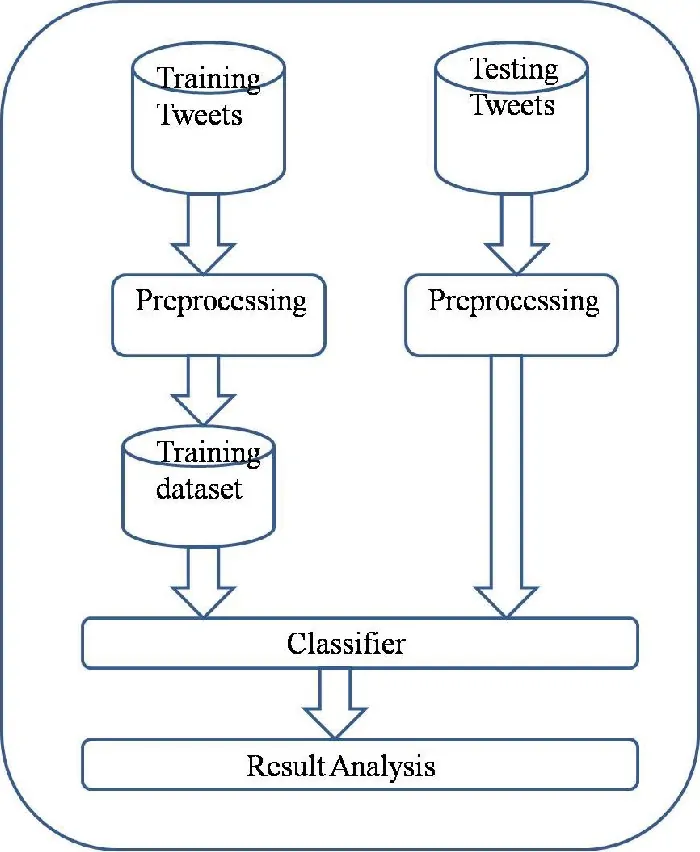

Step 4: Text Classification

Text classification is the last step in text analysis, which issues predefined names or categories to text information. Different from clustering, which is unsupervised, classification could be a well-directed learning errand that requires labelled training information. Spam detection is a familiar example of text classification in which emails are classified either as spam or as not spam.

For classifying a text Naive Bayes algorithm is commonly utilized. It is a basic however compelling, particularly for chores like spam filtering or analyzing sentiment. The following code demonstrates text classification with Naive Bayes algorithm using Python:

from sklearn.naive_bayes import MultinomialNB

from sklearn.model_selection import train_test_split

from sklearn.datasets import fetch_20newsgroups

# Fetch a sample dataset of news articles

newsgroups = fetch_20newsgroups(subset=’train’, categories=[‘sci.space’, ‘rec.autos’])

# Split the dataset into training and test sets

X_train, X_test, y_train, y_test = train_test_split(newsgroups.data, newsgroups.target, test_size=0.3, random_state=42)

# Transform the text data into TF-IDF features

tfidf_X_train = tfidf_vectorizer.fit_transform(X_train)

tfidf_X_test = tfidf_vectorizer.transform(X_test)

# Train a Naive Bayes classifier

classifier = MultinomialNB()

classifier.fit(tfidf_X_train, y_train)

# Test the model and print the accuracy

accuracy = classifier.score(tfidf_X_test, y_test)

print(f’Classification Accuracy: {accuracy}’)

Data Mining For Sentiment Analysis

Sentiment analysis, also called opinion mining, includes evaluating the emotional tone passed on by the text information. With data mining, sentiment analysis can be executed through various techniques; the most common ones are lexicon-based approaches and machine learning models. The precise, step-by-step process to perform sentiment analysis are a s follows:

Step 1: Using Lexicon-Based Analysis

Lexicon-based sentiment analysis employs predefined word references or lexicons in which each word is related to sentiment scores. This strategy surveys the overall sentiment of content by computing the sentiment scores of separate words and aggregating them.

Python Code To Analyze Sentiment Utilizing Lexicon-Based Approach:

from nltk.sentiment import SentimentIntensityAnalyzer

# Initialize the sentiment analyzer

sia = SentimentIntensityAnalyzer()

# Sample text for sentiment analysis

text = “The product is amazing and I absolutely love it!”

# Perform sentiment analysis

sentiment = sia.polarity_scores(text)

print(sentiment)

Step 2: Preparing Data

Before you apply machine learning models, it’s important to make ready the text data. It includes cleaning the text, evacuating insignificant content, and changing it into a format appropriate for analysis. Essential tasks incorporate tokenization, expelling stopwords, and vectorization.

Python Code To Change Content Information Into Numerical Format:

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.datasets import load_files

from sklearn.model_selection import train_test_split

# Load a dataset of text samples with sentiment labels

reviews = load_files(‘txt_sentoken’) # Directory with text files of movie reviews

X_train, X_test, y_train, y_test = train_test_split(reviews.data, reviews.target, test_size=0.25, random_state=42)

# Convert text to a matrix of token counts

vectorizer = CountVectorizer()

X_train_counts = vectorizer.fit_transform(X_train)

X_test_counts = vectorizer.transform(X_test)

Step 3: Using Machine Learning Based Analysis

This step includes the training of machine learning models to classify text as specified by sentiment. It includes utilizing labelled datasets to instruct the model on how to distinguish between positive, negative, and neutral assumptions. Different algorithms, like logistic regression, support vector machines and neural systems, can be utilized.

Python Code For Training And Assess A Sentiment Analysis Model:

from sklearn.linear_model import LogisticRegression

# Initialize and train the logistic regression model

classifier = LogisticRegression()

classifier.fit(X_train_counts, y_train)

# Test the model’s accuracy

accuracy = classifier.score(X_test_counts, y_test)

print(f’Sentiment Analysis Accuracy: {accuracy}’)

Step 4: Model Evaluation And Interpretation

After you have trained the model, the last step of sentiment analysis is to assess the model’s performance and interpret the results. It is about looking at metrics of accuracy, precision, recall, and F1-score, and assessing any misclassifications to progress the model.

Python Code For Generating And Printing Classification Report:

from sklearn.metrics import classification_report

# Predict sentiments on the test set

y_pred = classifier.predict(X_test_counts)

# Generate and print a classification report

report = classification_report(y_test, y_pred, target_names=reviews.target_names)

print(report)

Conclusion:

To sum up, unstructured raw texts can be transformed into a structured format for text and sentiment analysis via data mining, allowing for the discovery of novel insights and significant trends. Large textual datasets can be analyzed using text-mining techniques to uncover hidden links, patterns, and important topics. Data mining techniques in sentiment analysis help evaluate and categorize sentiments. Businesses can use sentiment analysis to determine what consumers think about particular brands, products, or services based on comments and discussions they see online. Eventually, data mining becomes a potent tool for textural analysis both in individual and organizational research.