How To Scrape Websites With Multi-Level Pagination

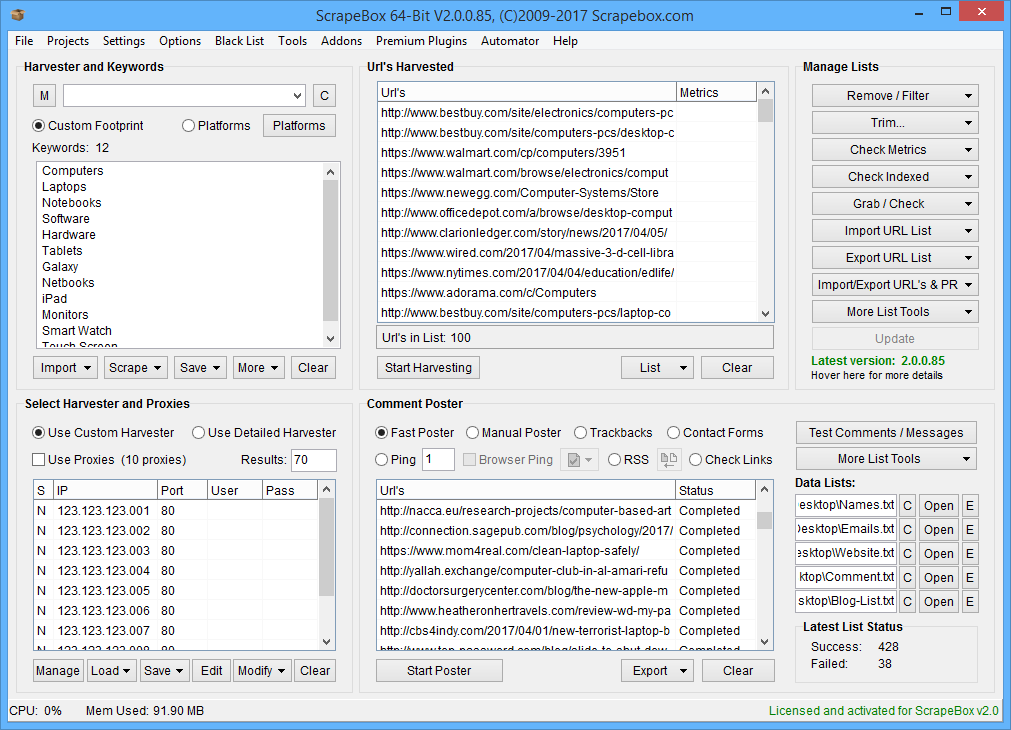

Web scraping is a practical way to extract valuable data, often in large volumes, from websites. Many tools and techniques help developers scrape even large datasets efficiently. Scraping becomes considerably more complex, however, when a site spreads its data across multiple pages or uses multi-level pagination, where content is split into several layers, such as categories that each have their own numbered pages. Simple scraping strategies often fail on this kind of content, so more refined approaches are needed. The major considerations include careful analysis of URL structures, robust code, handling IP blocks, broken links, and infinite scrolling, and familiarity with scraping tools such as Scrapy, Selenium, and Beautiful Soup. Additional techniques for navigation, automation, and data capture also matter. This blog walks through a step-by-step process, highlighting the techniques and tools needed to scrape websites with multi-level pagination.

Step 1: Inspecting The Website Structure

The first step in scraping a website with multi-level pagination is to examine its structure carefully. Open the target webpage in your browser and locate the pagination controls. These are typically found at the bottom of the page, shown as page numbers or Next and Previous buttons. Right-click on the pagination controls and choose Inspect in Chrome to open Developer Tools, where you can see the page's underlying HTML.

Focus on the elements related to pagination. You will likely see tags wrapping page numbers or navigation links with particular class names or IDs. Note how the page number changes in the URL, or how different pages are represented in the markup. Look for patterns such as a query parameter like ?page=1, ?page=2, and so on. If the site uses JavaScript for pagination, note any dynamic requests (visible in the Network tab) or event listeners involved.

Understanding the exact pagination structure will help later when you automate the scraping process, ensuring that each subsequent request targets the correct page.
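Once DevTools shows where the pagination links live, a short script can confirm the pattern by listing each link's target URL and its page parameter. The sketch below uses only Python's standard library. The `pagination` class name, the sample HTML, and the helper names `PaginationLinkParser` and `page_links` are hypothetical, so substitute what you actually see in the Inspect panel.

```python
from html.parser import HTMLParser
from urllib.parse import parse_qs, urljoin, urlparse


class PaginationLinkParser(HTMLParser):
    """Collect <a href> values inside the element whose class list contains container_class."""

    def __init__(self, container_class="pagination"):
        super().__init__()
        self.container_class = container_class  # hypothetical class name, check DevTools
        self.container_tag = None  # tag name of the container we are inside, if any
        self.depth = 0  # nesting depth of that tag name, so nested <ul>/<div> tags are handled
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if self.container_tag is None:
            # Not inside a pagination container yet: does this tag open one?
            if self.container_class in (attrs.get("class") or "").split():
                self.container_tag, self.depth = tag, 1
            return
        if tag == self.container_tag:
            self.depth += 1
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if self.container_tag is not None and tag == self.container_tag:
            self.depth -= 1
            if self.depth == 0:
                self.container_tag = None  # left the container


def page_links(html, base_url, param="page"):
    """Return (absolute_url, page_value) pairs for links in the pagination container."""
    parser = PaginationLinkParser()
    parser.feed(html)
    pairs = []
    for href in parser.links:
        absolute = urljoin(base_url, href)  # resolve relative links like "?page=2"
        values = parse_qs(urlparse(absolute).query).get(param)
        pairs.append((absolute, values[0] if values else None))
    return pairs


# Hypothetical markup resembling what the Inspect panel might show
sample_html = """
<ul class="pagination">
  <li><a href="?page=1">1</a></li>
  <li><a href="?page=2">2</a></li>
  <li><a href="/products?page=3">Next</a></li>
</ul>
<a href="/about">About</a>
"""

for url, page in page_links(sample_html, "https://example.com/products"):
    print(page, url)
```

If the page values climb by one, a simple numeric loop is enough; if they jump in fixed steps (10, 20, 30), the site is probably using item offsets rather than page numbers.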

Step 2: Extracting Information From The First Page

After inspecting the pagination structure, the next step is to extract data from the first page of the website. To do that, send an HTTP request to the first page's URL. In Python, one of the most commonly used libraries for this is requests, usually paired with BeautifulSoup for parsing. The following example sends a GET request and extracts the content:

```python
import requests
from bs4 import BeautifulSoup

# URL of the first page
url = 'https://example.com/products?page=1'

# Send GET request to fetch the page content (the timeout prevents hanging forever)
response = requests.get(url, timeout=10)

# Check if the request was successful
if response.status_code == 200:
    # Parse the page content using BeautifulSoup
    soup = BeautifulSoup(response.text, 'html.parser')

    # Example: extract all items with a specific class (e.g., product listings)
    items = soup.find_all('div', class_='item')

    # Process the extracted data
    for item in items:
        heading = item.find('h2')
        if heading is not None:  # skip items that have no <h2> title
            print(heading.get_text(strip=True))
else:
    print(f"Failed to retrieve the page. Status code: {response.status_code}")
```

This script fetches the first page's HTML, parses it, and extracts the items (here, product listings). The same process applies to every page you scrape later.
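The script above gives up on the first non-200 response. When scraping many pages, temporary failures such as HTTP 429 (Too Many Requests) or 5xx server errors are common, so it can help to retry with a growing delay. The sketch below is one way to do that. `fetch_with_retries` is a hypothetical helper, the set of retryable status codes is an assumption, and the request function is passed in (for example `requests.get`) so the logic can be tried without network access.

```python
import time

# Status codes treated as temporary; this set is an assumption, adjust it per site
RETRY_STATUSES = {429, 500, 502, 503, 504}


def fetch_with_retries(get, url, retries=3, backoff=1.0, timeout=10, sleep=time.sleep):
    """Call get(url, timeout=...) and retry temporary errors with exponential backoff.

    `get` is any callable returning an object with .status_code and .text,
    such as requests.get. Returns the response text, or None if all attempts fail.
    Connection errors raised by `get` are not caught here.
    """
    for attempt in range(retries + 1):
        response = get(url, timeout=timeout)
        if response.status_code == 200:
            return response.text
        if response.status_code not in RETRY_STATUSES or attempt == retries:
            break  # permanent error, or no attempts left
        sleep(backoff * 2 ** attempt)  # wait 1s, 2s, 4s, ... before the next attempt
    print(f"Giving up on {url}. Last status code: {response.status_code}")
    return None


# Usage with requests (needs network access):
# import requests
# html = fetch_with_retries(requests.get, 'https://example.com/products?page=1')
```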

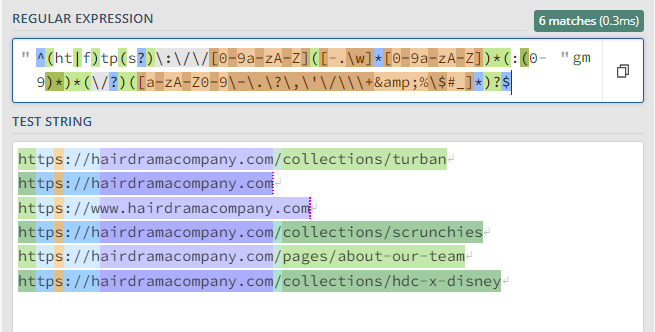

Step 3: Determining The URL Pattern

Once you have extracted data from the first page, the third step is to determine the URL pattern used for pagination across multiple pages. Most sites use a consistent format for their page URLs, in which only the page number or an identifier in the query string changes. By examining the first page's URL, you can work out how to navigate between pages.

For instance, if the first page URL is:

https://example.com/products?page=1

Then the pagination pattern likely involves incrementing the page number in the URL, like this:

Page 2: https://example.com/products?page=2

Page 3: https://example.com/products?page=3

To automate this, you iterate through the pages programmatically. Pagination does not always use simple page numbers, though. Some sites use offset parameters such as ?start=10 or more complex formats, and working out the exact mechanism then requires a closer look at the site's HTML or JavaScript code.
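Both styles, page numbers and offsets, can be covered by one small URL builder. The sketch below assumes the page size (10 items per page in the example) is known from inspecting the site and that offsets start at 0. `build_page_url` and its parameter names are illustrative, not part of any library. It uses `urllib.parse` so that existing query parameters, such as a category or search filter, are preserved.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def build_page_url(base_url, page, param="page", per_page=None):
    """Return base_url with its pagination parameter set for the given 1-based page.

    If per_page is None, the parameter is the page number (?page=3).
    Otherwise it is treated as a zero-based item offset (?start=20 for page 3, 10 per page).
    """
    value = page if per_page is None else (page - 1) * per_page
    parts = urlparse(base_url)
    # Keep existing filters such as category or search terms
    # (note: repeated keys collapse to their last value)
    query = dict(parse_qsl(parts.query))
    query[param] = str(value)
    return urlunparse(parts._replace(query=urlencode(query)))


print(build_page_url("https://example.com/products", 2))
# https://example.com/products?page=2
print(build_page_url("https://example.com/search?q=shoes", 3, param="start", per_page=10))
# https://example.com/search?q=shoes&start=20
```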

Moreover, some websites implement Next or Previous buttons without exposing actual page numbers. In that case, you need to track your position and build the next URL dynamically from attributes or metadata in the page's HTML. Once you understand the URL pattern, you can move from page to page with little effort while scraping.
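When a site exposes only a Next link, you can follow that link until it disappears. The sketch below assumes the link is marked with `rel="next"` (many sites do this, but check yours, since a class name such as `next` is just as common). It takes a `fetch` function that returns HTML, so it can be tested without network access; in practice that could be something like `lambda u: requests.get(u, timeout=10).text`. The helper names are hypothetical. A set of visited URLs guards against pagination loops, and `max_pages` caps the crawl.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkParser(HTMLParser):
    """Record the href of the first <a> or <link> tag whose rel contains "next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if self.next_href is None and tag in ("a", "link") and "next" in rel:
            self.next_href = attrs.get("href")


def crawl_next_links(start_url, fetch, max_pages=100):
    """Yield (url, html) for each page, following rel="next" links from start_url."""
    url, visited = start_url, set()
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)
        html = fetch(url)
        yield url, html
        parser = NextLinkParser()
        parser.feed(html)
        # Resolve relative links against the current page URL; stop when there is none
        url = urljoin(url, parser.next_href) if parser.next_href else None


# Hypothetical pages standing in for real HTTP responses
pages = {
    "https://example.com/products": '<a rel="next" href="/products?after=abc">Next</a>',
    "https://example.com/products?after=abc": '<a rel="next" href="?after=def">Next</a>',
    "https://example.com/products?after=def": "<p>Last page</p>",
}

for url, html in crawl_next_links("https://example.com/products", pages.__getitem__):
    print(url)
```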

Step 4: Iterating Through The Pages

After identifying the URL pattern, the next step is to iterate through the pages and scrape data from each one. This usually involves a loop that changes the page number or query parameter in the URL and fetches the content accordingly.

Below is an example of iterating through the pages using Python and requests:

```python
import time

import requests
from bs4 import BeautifulSoup

# Base URL for pagination
base_url = 'https://example.com/products?page='

# Number of pages to scrape
num_pages = 5

for page_num in range(1, num_pages + 1):
    # Construct the full URL for the current page
    url = base_url + str(page_num)

    # Send GET request to fetch the current page content
    response = requests.get(url, timeout=10)

    if response.status_code == 200:
        # Parse the page content
        soup = BeautifulSoup(response.text, 'html.parser')

        # Extract data, e.g., items for this page
        items = soup.find_all('div', class_='item')
        for item in items:
            heading = item.find('h2')
            if heading is not None:
                print(heading.get_text(strip=True))  # Process the extracted data
    else:
        print(f"Failed to retrieve page {page_num}. Status code: {response.status_code}")

    # Pause briefly between requests to avoid overloading the server
    time.sleep(1)
```

In the example above, the loop builds the URL for each page number. For each page, the script sends an HTTP request, retrieves the HTML, and parses it with BeautifulSoup to extract the data. You can adapt the loop to more complex pagination schemes, where the query parameters also change for elements such as categories or search filters, or where content loads through infinite scrolling.

This loop lets you systematically scrape every paginated page in the given range.
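Multi-level pagination adds an outer loop: for example, a site may paginate its list of categories and then paginate the products within each category. The sketch below assumes you already have two functions, one returning the categories on a given page of the outer listing and one returning the items on a given page of a category; `get_categories` and `get_items` are hypothetical placeholders for your own requests and BeautifulSoup code. Instead of a fixed `num_pages`, each level stops at the first page that comes back empty, which is a common but not universal signal that the last page has been reached.

```python
import time
from functools import partial


def paginate(fetch_page, max_pages=100, delay=0.0):
    """Yield results page by page until fetch_page(n) returns an empty list."""
    for page in range(1, max_pages + 1):
        results = fetch_page(page)
        if not results:  # empty page: assume we have passed the last page
            return
        yield from results
        if delay:
            time.sleep(delay)  # be polite between requests


def scrape_multi_level(get_categories, get_items, delay=0.0):
    """Outer level: paginated category list. Inner level: paginated items per category."""
    data = {}
    for category in paginate(get_categories, delay=delay):
        # partial(get_items, category)(page) calls get_items(category, page)
        data[category] = list(paginate(partial(get_items, category), delay=delay))
    return data


# Hypothetical in-memory data standing in for real HTTP requests
CATEGORY_PAGES = {1: ["shoes", "hats"], 2: ["bags"]}
ITEM_PAGES = {
    ("shoes", 1): ["Runner", "Boot"],
    ("shoes", 2): ["Sandal"],
    ("hats", 1): ["Cap"],
    ("bags", 1): ["Tote"],
}

result = scrape_multi_level(
    lambda page: CATEGORY_PAGES.get(page, []),
    lambda category, page: ITEM_PAGES.get((category, page), []),
)
print(result)
# {'shoes': ['Runner', 'Boot', 'Sandal'], 'hats': ['Cap'], 'bags': ['Tote']}
```

Keeping the "how to fetch one page" logic separate from the "how to walk the levels" logic means the same `paginate` helper works at every level, whatever the URL pattern.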

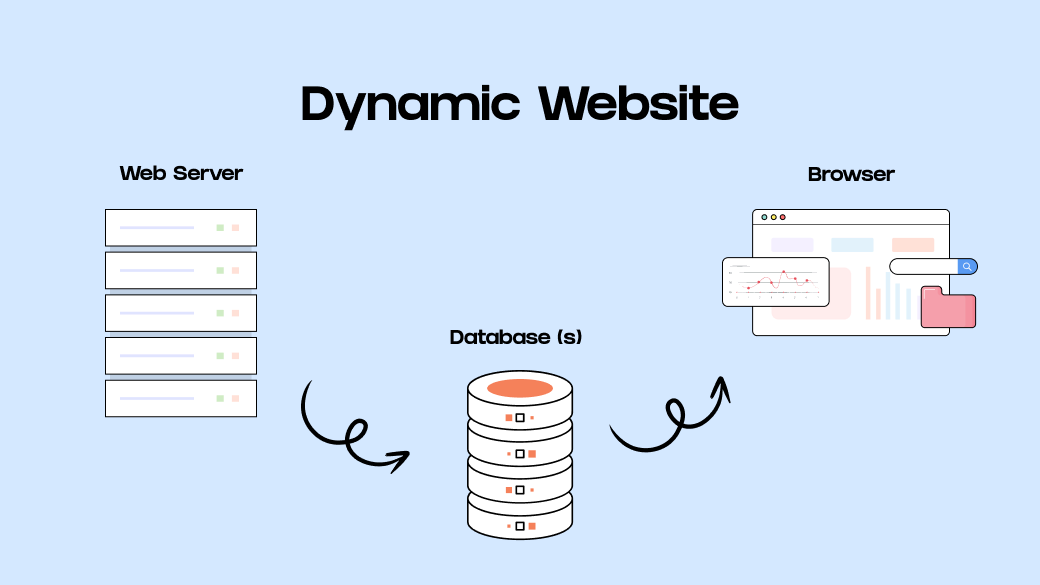

Step 5: Handling Dynamic Content Loading

Some sites load content dynamically with JavaScript, meaning the initial HTML response does not contain all the data you want. Instead, content is loaded after the page renders, through JavaScript-driven interactions or API calls. In such cases, basic tools such as requests and BeautifulSoup may not be enough to scrape all the information.

To address this, you can use tools that execute JavaScript and simulate user interaction. A commonly used library for this purpose is Selenium, which automates a browser session and lets the page load as it would in a real browser.

The following example handles dynamic content using Selenium in Python:

```python
from time import sleep

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By

# Path to your WebDriver (e.g., chromedriver). Selenium 4 takes it through a
# Service object; the older executable_path argument was removed in recent releases.
driver_path = '/path/to/chromedriver'

# Open the browser and navigate to the page
driver = webdriver.Chrome(service=Service(driver_path))
try:
    driver.get('https://example.com/products')

    # Wait for content to load
    sleep(5)  # You may adjust this time or use WebDriverWait to handle more complex scenarios

    # Find elements after content loads (e.g., product listings)
    items = driver.find_elements(By.CLASS_NAME, 'item')
    for item in items:
        title = item.find_element(By.TAG_NAME, 'h2').text
        print(title)  # Process the data
finally:
    # Close the browser after scraping, even if an error occurred
    driver.quit()
```

In the example above, Selenium launches a real browser, allowing JavaScript to run and fully load the dynamic content. The script waits for the page to load with sleep(5) (WebDriverWait is more robust for complex cases), then extracts the data with Selenium methods such as find_elements and find_element. Once extraction is complete, the browser is closed with driver.quit().

This step is especially important for sites that rely heavily on JavaScript to render content, such as social media platforms, e-commerce sites, and news sites.
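Because dynamically loaded content often comes from API calls, it can also pay to watch the Network tab (filtered to Fetch/XHR) while clicking through pages. If the data arrives as JSON, you may be able to page through that endpoint directly instead of driving a browser. The sketch below assumes a hypothetical response shape with a `results` list and a `next_cursor` field that is empty on the last page; real APIs name these differently, and `fetch_json` stands in for your own request code.

```python
def iter_cursor_pages(fetch_json, max_pages=1000):
    """Yield items from a cursor-paginated JSON API until no next cursor is returned.

    fetch_json(cursor) must return a dict shaped like
    {"results": [...], "next_cursor": "..." or None}  (hypothetical field names).
    The first call receives cursor=None.
    """
    cursor, seen = None, set()
    for _ in range(max_pages):
        payload = fetch_json(cursor)
        yield from payload.get("results", [])
        cursor = payload.get("next_cursor")
        if not cursor or cursor in seen:  # last page, or the API is repeating itself
            return
        seen.add(cursor)


# With requests, fetch_json might look like this (assumed endpoint and parameter name):
# fetch_json = lambda cursor: requests.get("https://example.com/api/products",
#                                          params={"cursor": cursor}, timeout=10).json()

# Hypothetical API responses keyed by cursor (None = first request)
FAKE_API = {
    None: {"results": [{"id": 1}, {"id": 2}], "next_cursor": "c2"},
    "c2": {"results": [{"id": 3}], "next_cursor": None},
}
ids = [item["id"] for item in iter_cursor_pages(FAKE_API.__getitem__)]
print(ids)  # [1, 2, 3]
```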

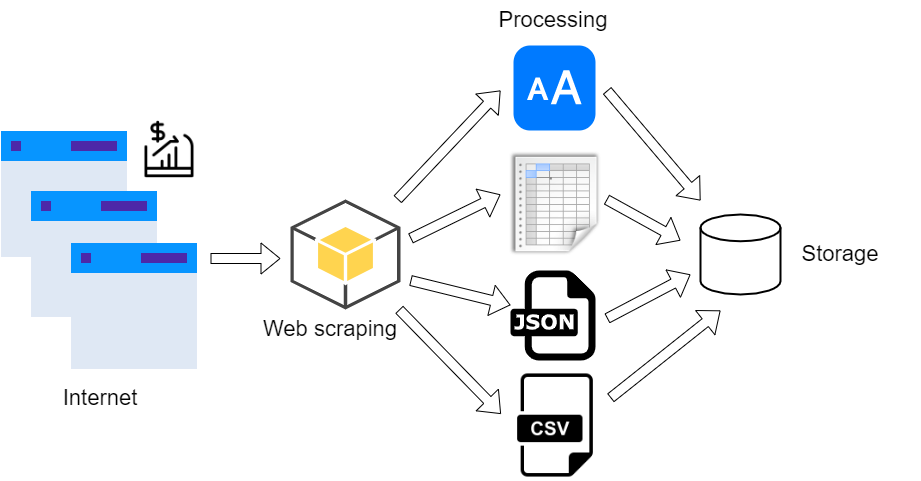

Step 6: Storing The Scraped Information

After scraping the data, store it in an accessible format for later use. You can save it as CSV, as JSON, or in a database, depending on the complexity and volume of the data.

To store data as CSV, you can use Python's csv module, which writes plain-text files that can be opened in tools like Excel.

```python
import csv

# `items` is assumed to be a list of dicts with 'title', 'price', and 'link' keys
headers = ['Title', 'Price', 'Link']

with open('scraped_data.csv', 'w', newline='', encoding='utf-8') as file:
    writer = csv.writer(file)
    writer.writerow(headers)
    for item in items:
        writer.writerow([item['title'], item['price'], item['link']])
```

For more complex or nested data structures, JSON is a better fit, using Python's json module:

```python
import json

data = [{'title': item['title'], 'price': item['price'], 'link': item['link']} for item in items]

with open('scraped_data.json', 'w') as json_file:
    json.dump(data, json_file, indent=4)
```
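With multi-level pagination a run can take a long time, and collecting everything in memory before a single json.dump means a crash late in the run loses every page scraped so far. One alternative, sketched below, is JSON Lines: append one JSON object per line as each page is scraped. The `append_items` and `load_items` helpers and the file name are illustrative; the example writes to a temporary directory so it can be run as is.

```python
import json
import os
import tempfile


def append_items(path, items):
    """Append each item as one JSON object per line (JSON Lines).

    The file is closed, and therefore flushed, after every call,
    so calling this once per page keeps finished pages on disk.
    """
    with open(path, "a", encoding="utf-8") as f:
        for item in items:
            f.write(json.dumps(item, ensure_ascii=False) + "\n")


def load_items(path):
    """Read a JSON Lines file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Example: one append_items call per scraped page (hypothetical data)
path = os.path.join(tempfile.mkdtemp(), "scraped_data.jsonl")
append_items(path, [{"title": "Runner", "price": "59.99", "link": "/p/1"}])
append_items(path, [{"title": "Boot", "price": "89.00", "link": "/p/2"}])
print(load_items(path))
```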

For large datasets, consider a database such as SQLite, which makes data management and querying simple.

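```python
import sqlite3

conn = sqlite3.connect('scraped_data.db')
cursor = conn.cursor()
cursor.execute('''CREATE TABLE IF NOT EXISTS products (title TEXT, price TEXT, link TEXT)''')

# Insert items (assumed to be dicts with 'title', 'price', 'link') and commit changes
cursor.executemany(
    'INSERT INTO products (title, price, link) VALUES (?, ?, ?)',
    [(item['title'], item['price'], item['link']) for item in items],
)
conn.commit()
conn.close()
```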
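Paginated listings often overlap: an item can appear under several categories, or shift onto the next page while you are scraping. One way to keep the database free of duplicates, sketched below under the assumption that the product link uniquely identifies an item, is a UNIQUE constraint combined with SQLite's INSERT OR IGNORE. The `save_items` helper is hypothetical, and the example uses an in-memory database so it can be run as is. Note that CREATE TABLE IF NOT EXISTS leaves an existing table unchanged, so a products table created earlier without the constraint will not gain it; use a fresh table or database file.

```python
import sqlite3


def save_items(conn, items):
    """Insert items, silently skipping any whose link is already stored. Returns rows added."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (title TEXT, price TEXT, link TEXT UNIQUE)"
    )
    before = conn.total_changes
    conn.executemany(
        "INSERT OR IGNORE INTO products (title, price, link) VALUES (?, ?, ?)",
        [(item["title"], item["price"], item["link"]) for item in items],
    )
    conn.commit()
    return conn.total_changes - before  # ignored duplicates are not counted


conn = sqlite3.connect(":memory:")  # use a file path such as 'scraped_data.db' to persist
page_1 = [{"title": "Runner", "price": "59.99", "link": "/p/1"}]
page_2 = [
    {"title": "Runner", "price": "59.99", "link": "/p/1"},  # repeated from page 1
    {"title": "Boot", "price": "89.00", "link": "/p/2"},
]
print(save_items(conn, page_1), save_items(conn, page_2))  # 1 1
print(conn.execute("SELECT COUNT(*) FROM products").fetchone()[0])  # 2
```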

Ultimately, choose the storage approach that fits your project's requirements.

Conclusion

In conclusion, websites such as social networks, job boards, and e-commerce platforms commonly use pagination to manage large volumes of data. Displaying everything on a single page would consume too much memory and slow down page loads, so splitting content across several pages makes it easier to handle. While this greatly benefits site administration, it makes scraping data from these sites more difficult. However, as scraping techniques have advanced, several strategies have emerged to address this. By following a methodical procedure like the one described in this blog, scraping sites with multi-level pagination becomes considerably simpler.