How To Scrape Data From SSL-Protected Websites

The Secure Sockets Layer or SSL encryption is a benchmark security and protection protocol that can verify a protected connection between a browser and a web server. SSL-protected sites contain URLs that commence with HTTPS instead of HTTP, which ensures that the encryption of that information is shared between the client and the server. This level of security is ideal for website protection from any unauthorized access or data theft, yet it sometimes becomes a great challenge for individuals and organizations looking to scrape such SSL-protected sites. Nevertheless, the use of common scraping tools or techniques may fall short as they result in encountering SSL errors or access, necessitating more refined scraping strategies. As a solution, this blog post will present a set of strategies that highlight the specific practices to help users smoothly scrape SSL-protected sites.

Step 1: Establishing The Environment

Create a suitable environment for your scraping task and begin with visiting python.org to download Python; for an effortless attempt consider following the instructions provided there.

After installing Python, you will have to access the necessary libraries. For that purpose, you will open your command prompt and execute the commands as follows:

pip install requests beautifulsoup4 lxml

Based on the above command:

The requests library helps you pass HTTP requests and to retrieve web pages.

The beautifulsoup4 is employed to parse data from HTML and XML documents.

The lxml functions as a powerful parser that can handle vast HTML or XML pages.

If you are looking for scraping sites with dynamic data loaded by Java scripts, you will have to use Selenium or Playwright. Look at the following command:

pip install selenium playwright

After you have installed the libraries, you can now move on to the next step, which is to send HTTPS requests.

Step 2: Sending The HTTPS Request

Use the requests library to help you retrieve the HTML content of that website.

Commence with importing the required libraries and making a request. You need to utilise the following commands.

import requests

url = “https://example.com” # Replace with the target website

headers = {

“User-Agent”: “Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36”

}

response = requests.get(url, headers=headers)

if response.status_code == 200:

print(“Request Successful!”)

print(response.text[:500]) # Print the first 500 characters of the page

else:

print(f”Failed to retrieve page. Status code: {response.status_code}”)

Moreover, you need to consider some essential points like:

Set a user-agent string to help you mimic the behaviour of a genuine browser since it is common for websites to block requests received from bots.

Inspect the response to make sure that your request returns a 200 status code; on the other hand, receiving a 403 or 429 as your status code means that the website holds an anti-scraping configuration.

To resolve issues related to connection or time-outs, you should employ try-except blocks.

This step will confirm that you securely retrieve information from an SSL-protected site before moving towards extracting the content.

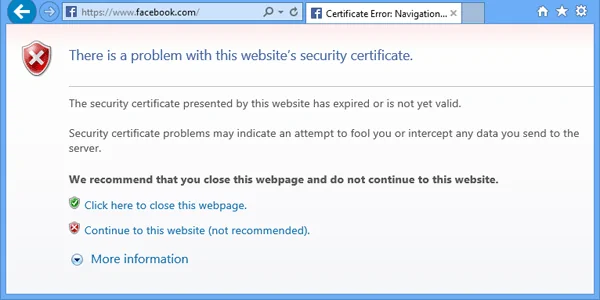

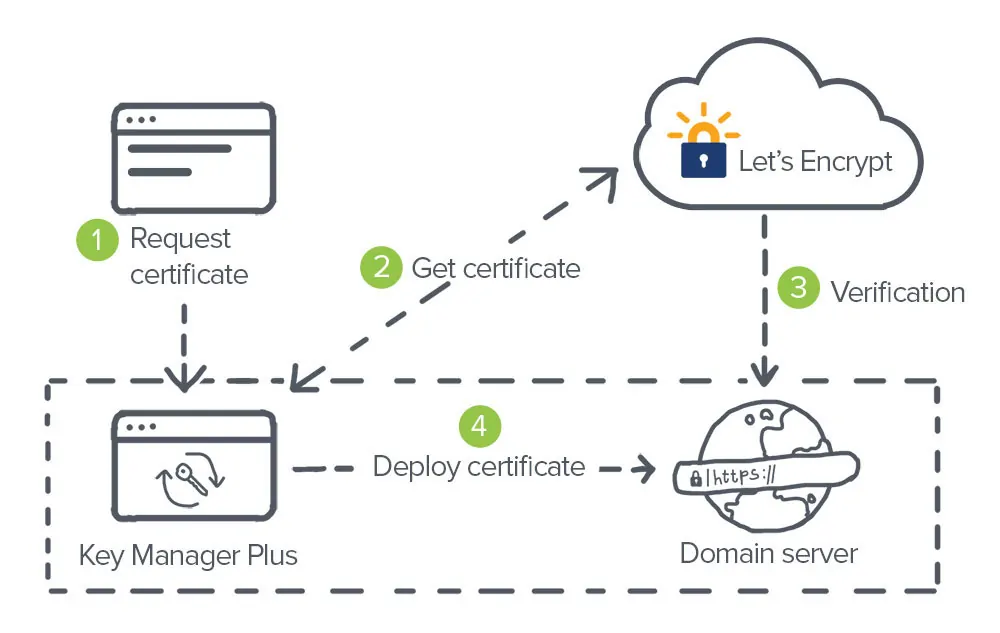

Step 3: Addressing SSL Verification

It often happens that you encounter SSL certificate errors as you scrape those sites; these measures prevent the success of requests. Some sites contain expired certifications, strict SSL layouts or any further verification necessities. You can address such issues by making sure to have proper SSL verification and security maintenance.

The requests library can verify the SSL certificates by default, and if the certificate is rational, the request goes smoothly. Even so, sometimes you are required to set the verification settings when you are getting an SSL-related error.

To handle SSL verification appropriately, you will use the verify=False parameter inside the request.get() to go around SSL certificate validation. Look into the following commands:

import requests

url = “https://example.com”

response = requests.get(url, verify=False)

print(response.text[:500]) # Print the first 500 characters of the response

Remember that as you disable SSL verification, your scarping process becomes open to security threats such as man-in-the-middle attacks, so you should only use this strategy if you have dire needs.

You can utilize a certifi package that offers you up-to-date root certificates so that you don’t need to disable SSL verification. To install this package, follow the command:

pip install certifi

After installation, you can use it inside your requests, for example, like this:

import requests

import certifi

url = “https://example.com”

response = requests.get(url, verify=certifi.where())

print(response.text[:500])

It will make sure to have reliable communication while properly dealing with SSL certificate verification.

Step 4: Extracting The Content

To extract valuable content from that webpage, you will need to utilize BeautifulSoup to parse and extract structured data, as the raw HTML content includes a blend of tags, scripts and text.

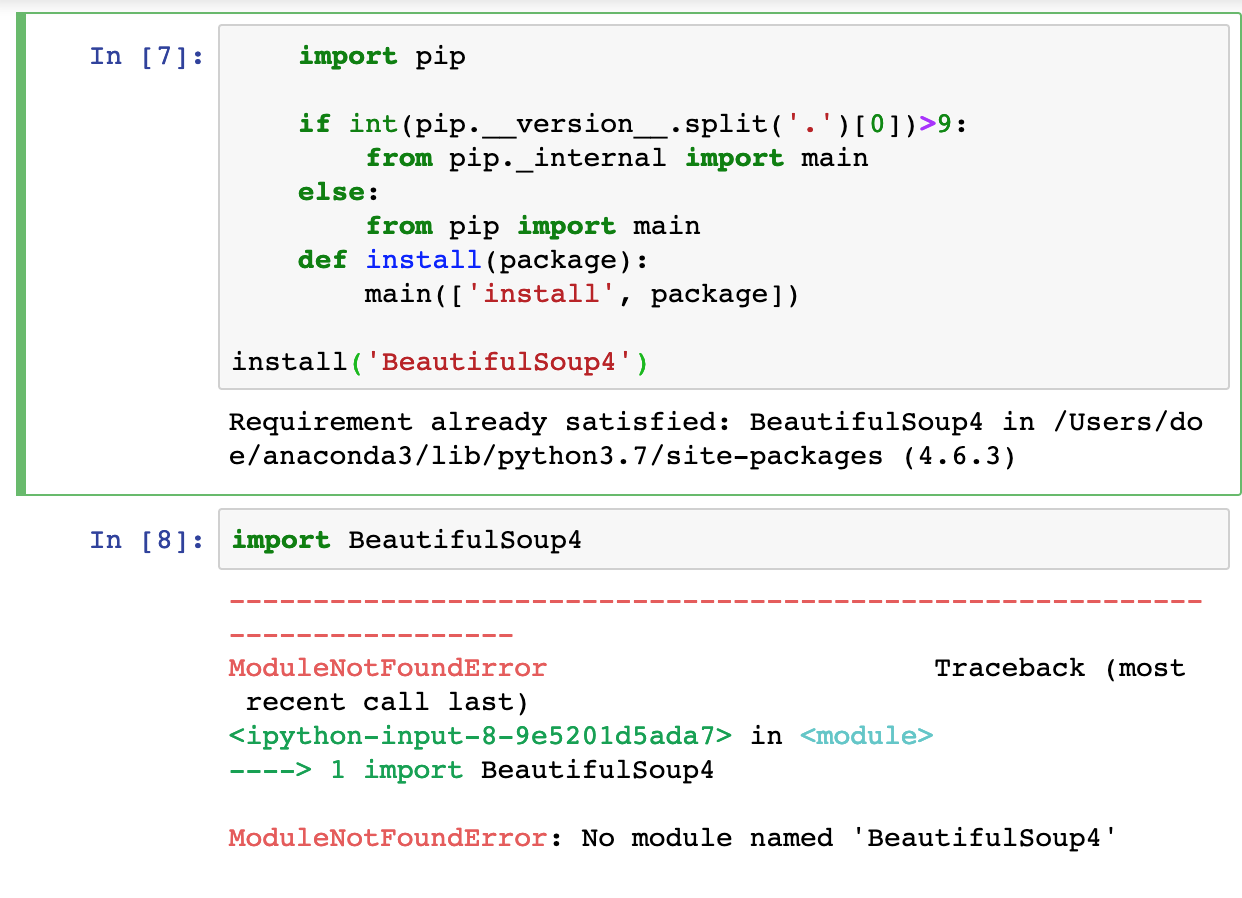

In the first place, you have to install BeautifulSoup using the following command:

pip install beautifulsoup4 lxml

Now look into the following example:

from bs4 import BeautifulSoup

import requests

url = “https://example.com”

headers = {“User-Agent”: “Mozilla/5.0”}

response = requests.get(url, headers=headers)

if response.status_code == 200:

soup = BeautifulSoup(response.text, “lxml”) # Use “html.parser” if lxml isn’t installed

print(soup.prettify()[:1000]) # Print formatted HTML (first 1000 characters)

else:

print(f”Failed to retrieve page. Status code: {response.status_code}”)

Utilize the BeautifulSoup selectors to extract the special content, such as links, paras and headings, like this:

title = soup.find(“title”).text # Extract page title

headings = [h1.text for h1 in soup.find_all(“h1”)] # Extract all H1 tags

links = [a[“href”] for a in soup.find_all(“a”, href=True)] # Extract all links

print(“Title:”, title)

print(“Headings:”, headings)

print(“Links:”, links)

At the end of this step, you will be done with the extraction of content from SSL-protected web pages.

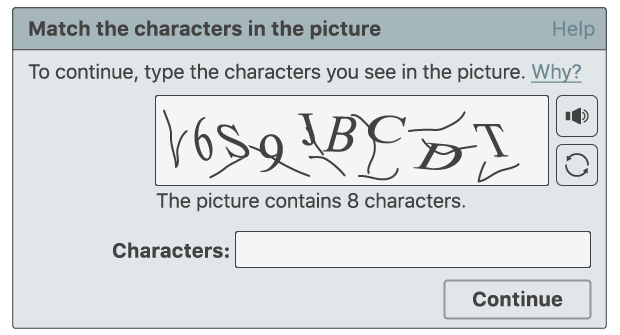

Step 5: Preventing Anti-Scarping Mechanisms

Using anti-scraping mechanisms such as IP blocking, JavaScript rendering, CAPTCHAs, and rate-limiting is commonplace for SSL-regulated sites, which is definitely to avoid data extraction automation. Consequently, you need different scarping techniques to scrape such sites successfully while overcoming detection.

In the first place, you need to rotate user agents that block scraping requests by default. To rotate user-agent headers, you can utilize the following code:

import requests

import random

user_agents = [

“Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36”,

“Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36”

]

headers = {“User-Agent”: random.choice(user_agents)}

response = requests.get(“https://example.com”, headers=headers)

Consider using a proxy server if a site is blocking repeated requests from the same IP, just like the following:

proxies = {“http”: “http://your-proxy.com:port”, “https”: “https://your-proxy.com:port”}

response = requests.get(“https://example.com”, headers=headers, proxies=proxies)

Assuming that the website loads its content dynamically using JavaScript, you will utilize Playwright or Selenium. Look into the following example.

from selenium import webdriver

driver = webdriver.Chrome()

driver.get(“https://example.com”)

html = driver.page_source # Extract dynamically loaded content

driver.quit()

All these strategies can significantly help you prevent common security mechanisms used by sites and provide you with an efficient scraping process.

Step 6: Storing The Extracted Information

After extracting data while preventing anti-scrape measures successfully, it’s time to store that data in a structured format to make it available for further use or analysis. The commonly employed data storage formats are JSON, CSV, and databases.

In all of these formats, the most frequently employed format is CSV, which can easily be opened in Excel and facilitates different analysis tools, too.

To store the extracted data in a CSV file, you will use the following command:

import csv

data = [(“Title 1”, “https://example.com/page1”), (“Title 2”, “https://example.com/page2”)]

with open(“scraped_data.csv”, “w”, newline=””, encoding=”utf-8″) as file:

writer = csv.writer(file)

writer.writerow([“Title”, “URL”]) # Header row

writer.writerows(data)

print(“Data saved to scraped_data.csv”)

If you pick the JSON format which is most suitable for storing data containing nested elements, you will use the following code:

import json

data = [{“title”: “Title 1”, “url”: “https://example.com/page1”}, {“title”: “Title 2”, “url”: “https://example.com/page2”}]

with open(“scraped_data.json”, “w”, encoding=”utf-8″) as file:

json.dump(data, file, indent=4)

print(“Data saved to scraped_data.json”)

If you have vast data sets, you will opt for databases like SQLite to make sure you get ideal data management. Look into the following example:

import sqlite3

conn = sqlite3.connect(“scraped_data.db”)

cursor = conn.cursor()

cursor.execute(“CREATE TABLE IF NOT EXISTS pages (title TEXT, url TEXT)”)

cursor.executemany(“INSERT INTO pages VALUES (?, ?)”, data)

conn.commit()

conn.close()

print(“Data stored in SQLite database”)

After storing the data in a suitable format for your utilization, it is prepared for easy retrieval, examination, or organization.

Conclusion

In summary, scraping data from SSL-regulated websites, though not with standard processes, is possible using advanced practices. Python is one of the most widespread programming languages, offering mighty tools like requests, BeautifulSoup, Selenium and more that facilitate smooth data extraction, benefitting various organizations. Since SSL-protected sites contain messages to encrypted and protected data exchange, the data extracted from such sites is more accurate and reliable, which is a valuable aspect for businesses and researchers. As data extractors handle the scraping process properly, using a well-structured approach like the one highlighted in this blog post, scraping SSL-protected sites becomes efficient as well as secure.