How To Monitor Website Changes With Web Scraping And Diffbot

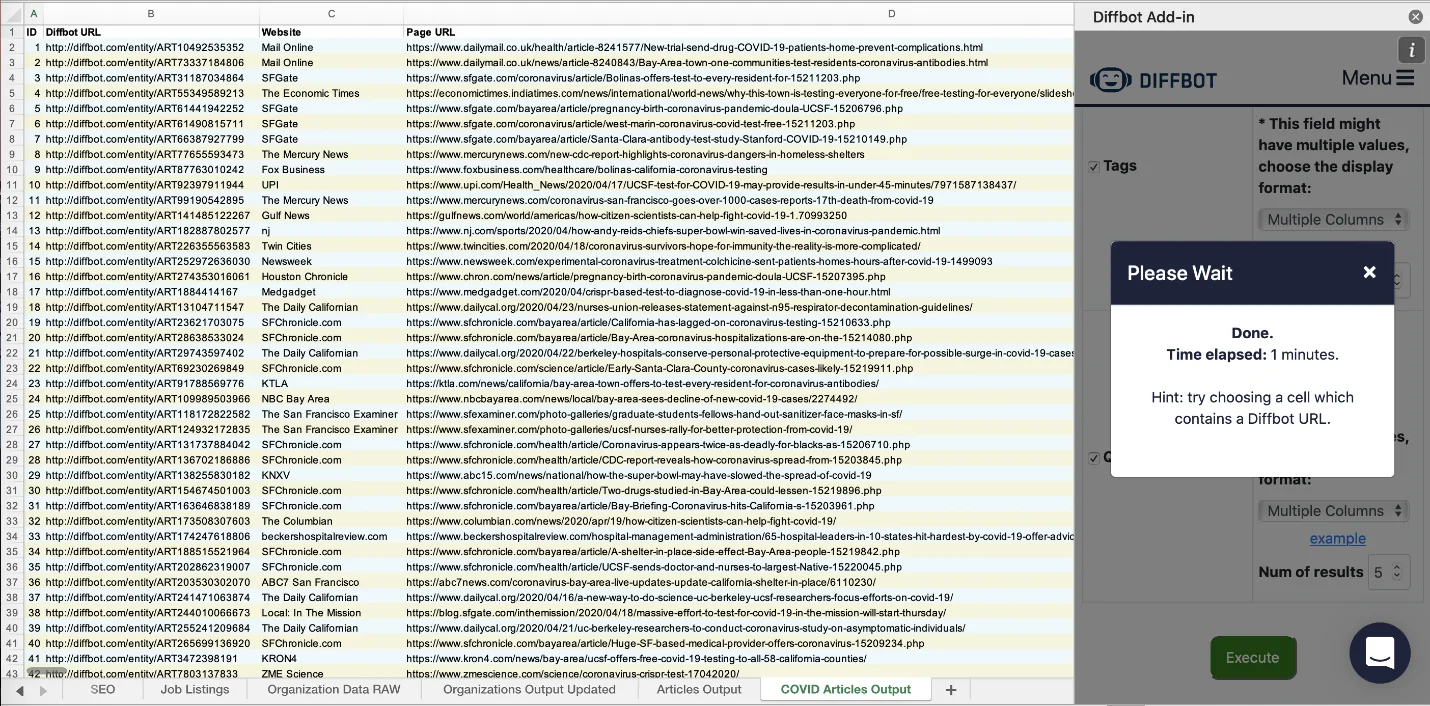

Monitoring website changes is an essential aspect for a multitude of use cases, including the supervision of price fluctuations, keeping an eye on new content, tracking product availability, and generally detecting any dynamic shift. Manually monitoring and comparing website changes can be much more time-consuming and error-prone, thus affecting business decisions. In consequence, the marketing teams and developers can opt for automated solutions like web scraping to get meaningful data with little time and in a more flawless form. Web scraping extracts data from sites automatically to offer users the capability to monitor specific content gradually. Unlike manual checks of changes or updates, web scraping scripts can be used to retrieve the exact content over and over and compare it for alterations. Various compelling tools are available for web scraping, each with its own distinct features. Diffbot is one such tool that effectively transforms web pages into structured data. Its advanced features facilitate users in smoothly gathering the required data, even from intricate pages with no in-depth technical expertise mandated. This blog will go on with a step-by-step process to simplify the use of Diffbot’s API to monitor changes in websites automatically, thus minimizing manual exertions.

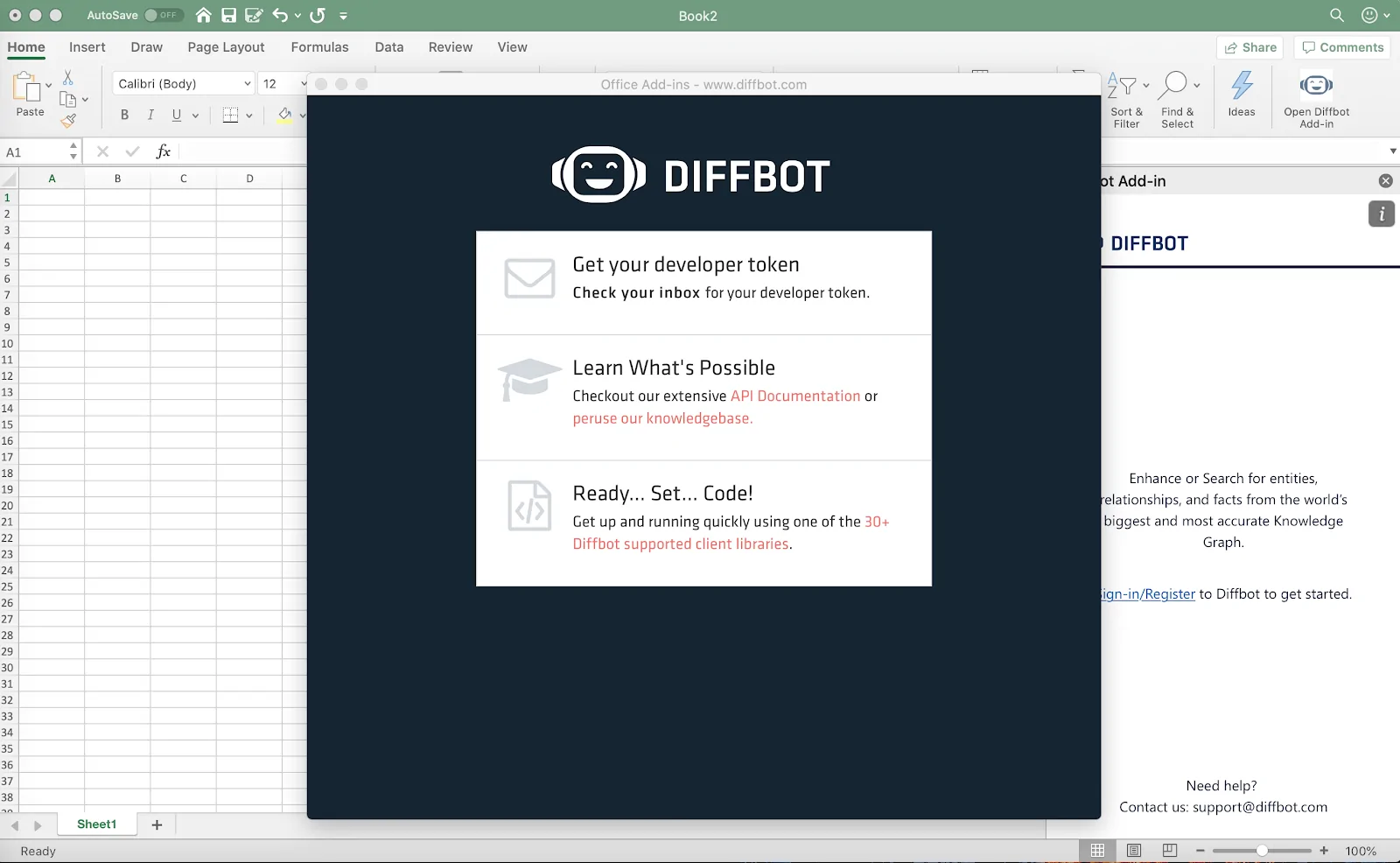

Step 1: Setting Up Diffbot API Access

The primary step in monitoring a site’s changes utilizing Diffbot is to set up access to their API. Begin by creating an account on the Diffbot site. After you are registered, head to the developer area and create your API token. That token is vital because it confirms your requests to the API.

With the token at your disposal, plan your environment for making API calls. In case you are utilizing Python, you will require the requests library to send HTTP requests. Install that library employing pip install requests. After you have installed it, you can compose a script to test your API access. Look into the following example:

import requests

API_TOKEN = ‘your_api_token_here’

url = f”https://api.diffbot.com/v3/analyze?token={API_TOKEN}&url=https://example.com”

response = requests.get(url)

if response.status_code == 200:

print(“API setup successful!”)

else:

print(f”Error: {response.status_code}”)

That fundamental script approves that the API token works and can scrutinize a sample webpage. Store your token safely, and do not share it publicly to preserve access control.

Step 2: Selecting The Target Website

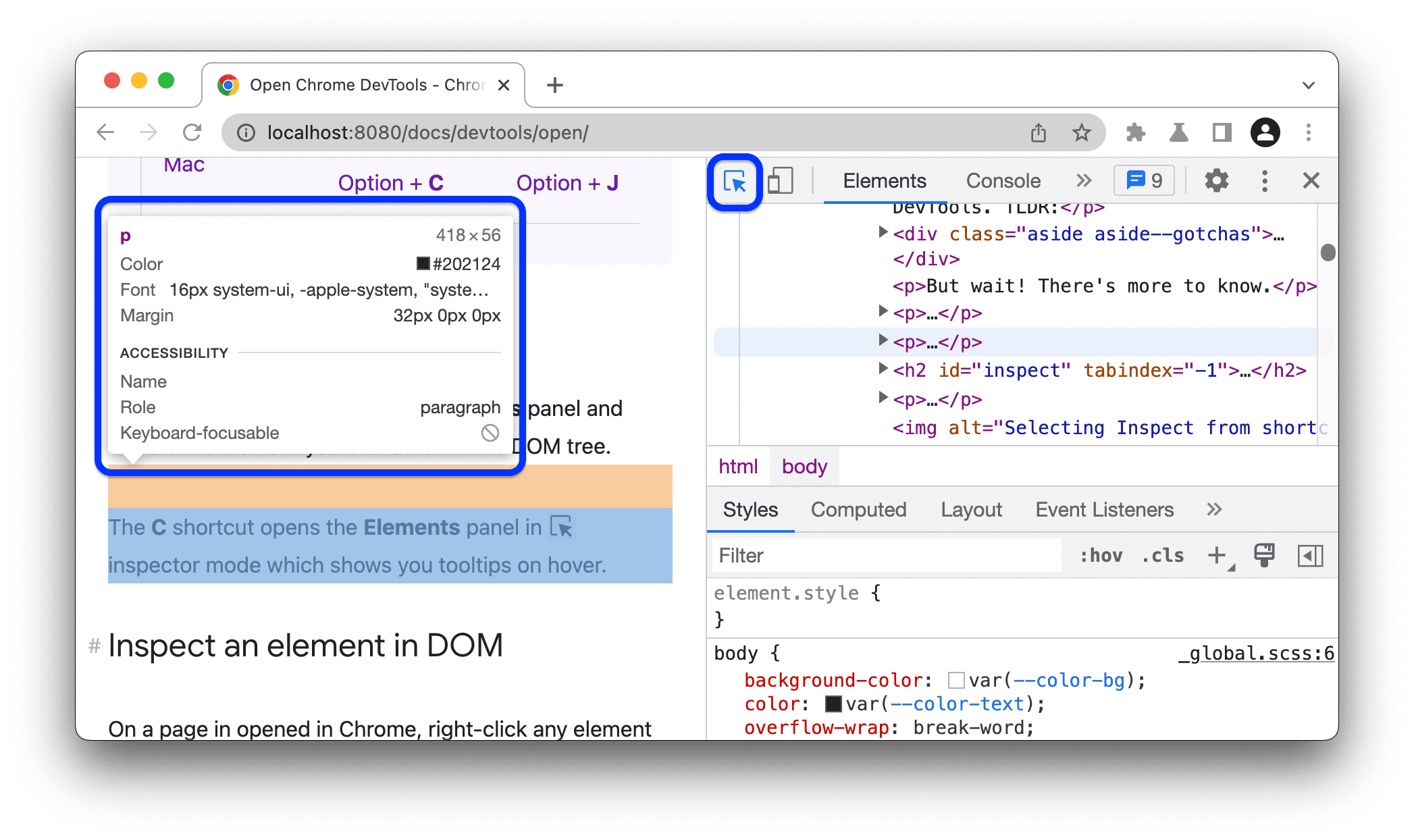

In the second step, you have to choose the site and particular content you need to monitor. Begin by investigating the configuration of the webpage. Utilize browser developer tools by right-clicking on the page and selecting Inspect to examine the elements containing the information of interest, like headlines, prices, or product details. Note the particular HTML tags or attributes, such as id, class, or data-* attributes, which distinctively determine these elements.

Utilizing Diffbot, you do not have to manually create detailed selectors. The API will automatically structure and extract critical data from web pages. Discern the URL of the target page and ensure that Diffbot supports its content. Execute a preliminary query to test like the following example:

target_url = “https://example.com/page-of-interest”

api_url = f”https://api.diffbot.com/v3/article?token={API_TOKEN}&url={target_url}”

response = requests.get(api_url)

if response.status_code == 200:

print(response.json())

else:

print(f”Error: {response.status_code}”)

It will verify that the target page’s data can be extracted effectively. Concentrate on configuring URLs for consistent formatting, particularly if observing multiple pages, like paginated or dynamically created content.

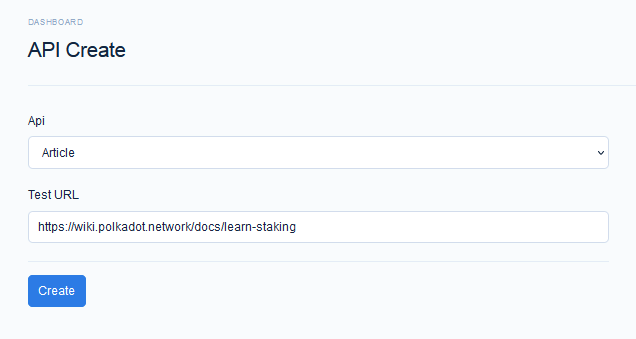

Step 3: Extracting The Key Elements

Once you have identified the target site, utilize Diffbot’s API to extract the key elements. The API offers tools such as Analyze, Article, or Custom API to fetch organized data from web pages. Select the API type per your content. For instance, utilize the Article API for blog content or the Product API for e-commerce data.

Formulate your API call by specifying the target URL and the required elements. For particular data points, like product costs or article titles, parse the JSON response shown by Diffbot. The following is an example in Python:

target_url = “https://example.com/page-of-interest”

api_url = f”https://api.diffbot.com/v3/article?token={API_TOKEN}&url={target_url}”

response = requests.get(api_url)

if response.status_code == 200:

data = response.json()

key_elements = {

“title”: data.get(“objects”, [{}])[0].get(“title”),

“author”: data.get(“objects”, [{}])[0].get(“author”),

“publish_date”: data.get(“objects”, [{}])[0].get(“date”)

}

print(key_elements)

else:

print(f”Error: {response.status_code}”)

That step will extract structured data, making sure that elements of interest are stored for further comparison or processing in subsequent phases. Continue fine-tuning API queries to polish results for dynamic or intricate pages.

Step 4: Comparing Extracted Data With Previously Saved Information

After data extraction is done, the fourth step involves comparing that data with previously saved information to catch changes. Start by storing the scraped information in a structured format, like a JSON file, database, or a basic text file. That will act as a baseline for future comparisons. For instance, save the data after Step 3. Look into the following example:

import json

key_elements = {

“title”: “Sample Title”,

“author”: “John Doe”,

“publish_date”: “2025-01-01”

}

# Save to JSON file

with open(“previous_data.json”, “w”) as file:

json.dump(key_elements, file)

Additionally, to compare with updated data from the website, look into the below example:

with open(“previous_data.json”, “r”) as file:

old_data = json.load(file)

# Example: compare titles

if old_data[“title”] != key_elements[“title”]:

print(“Change detected in the title!”)

else:

print(“No changes found.”)

That process can efficiently identify inconsistencies between the current and past information. Expand this logic to compare different fields or identify more complex shifts. Save the updated data after each monitoring session.

Step 5: Handling The Detected Changes

Once you have detected the changes, it is important to handle them proficiently. You need to create a logging framework to record changes or implement notifications to alarm you in real-time. Logging changes is a straightforward process. Put them in a file or database with a timestamp for tracking. Here is an example:

import datetime

# Log changes to a text file

with open(“change_log.txt”, “a”) as log_file:

change_time = datetime.datetime.now().strftime(“%Y-%m-%d %H:%M:%S”)

log_file.write(f”[{change_time}] Title changed to: {key_elements[‘title’]}\n”)

To get real-time notifications, integrate a service like mail alerts or Slack notifications. For instance, sending an e-mail utilizing Python’s smtplib like the following:

import smtplib

message = f”””\

Subject: Website Update Detected

New Title: {key_elements[‘title’]}”””

# Email setup

server = smtplib.SMTP(“smtp.gmail.com”, 587)

server.starttls()

server.login(“[email protected]”, “your_password”)

server.sendmail(“[email protected]”, “[email protected]”, message)

server.quit()

Select the suitable notification or logging strategy based on your monitoring objectives and response prerequisites, making sure that changes are instantly acted upon or looked into.

Step 6: Automating The Regular Scraping Sessions

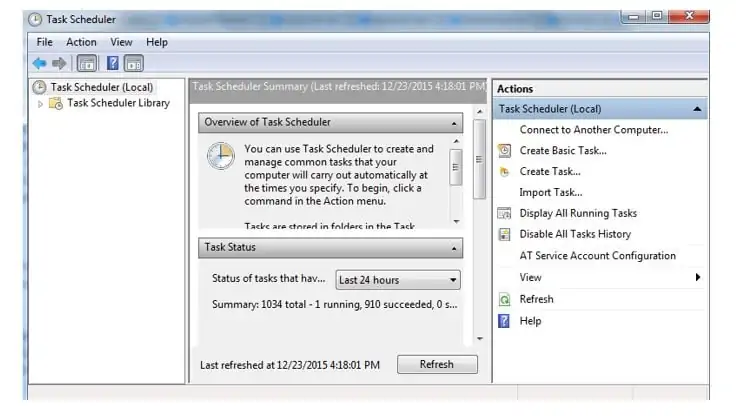

The ultimate step to effectively screen site changes over time involves automating the method by designing regular scraping sessions. Utilize task schedulers such as Cron (Linux/macOS) or Task Scheduler (Windows) for basic automation, or set up a planning library in Python for cross-platform compatibility.

For Python, a library such as schedule can be utilized to operate your scraping script at pre-determined intervals. Look into the following to schedule it:

import schedule

import time

def monitor_website():

# Code to fetch and compare the website data (Step 3 and 4)

print(“Monitoring website for changes…”)

# Schedule the task every hour

schedule.every(1).hour.do(monitor_website)

while True:

schedule.run_pending()

time.sleep(1)

The above code will run the monitor_website function each hour. On the off chance that you need more frequent checks or diverse time intervals, alter the schedule.every() settings.

To execute that script persistently, you can deploy it on a server or a machine that remains powered on. Make sure that the monitoring runs in the foundation to distinguish and go along with changes without manual intervention.

Conclusion

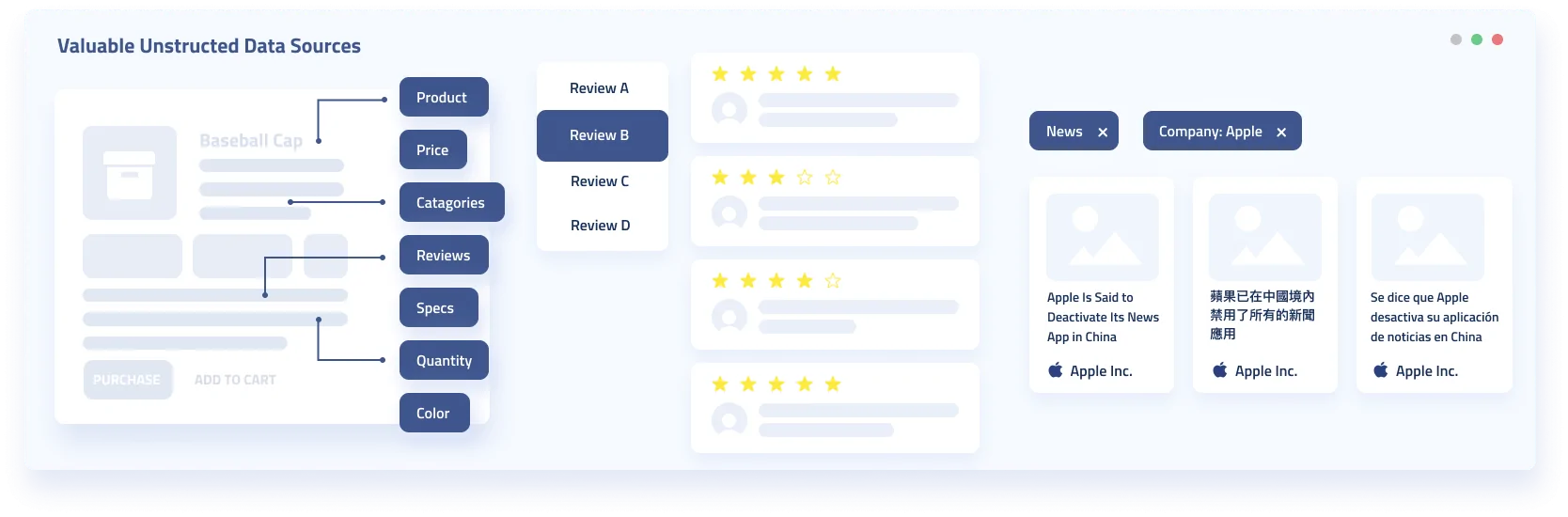

In conclusion, monitoring a website’s updates is critical for staying updated on useful information and shifting trends. Keeping track of website changes can be exhausting, especially if you don’t use appropriate tools, as manual checking is the least efficient and time-consuming approach. Diffbot, as well as other scraping tools, can help automatically identify elements and the layout of a page and then look for common visual clues to track when content on a page changes or to extract specific information for developers to use. In the end, you can access and track data changes on websites faster and more efficiently than with conventional web scraping techniques.