How To Extract Online Directory Listings Using Web Scraping

Online directory listings hold large databases of firms and are among the best sources of business names, contact details, website links, and similar information that industries can put to use in many ways. Extracting this data can give a business a competitive edge, provided it uses the right tools and methods. Because manual extraction is slow, web scraping tools such as BeautifulSoup, Selenium, and Scrapy are used to automate the process. Web scraping does come with challenges, chiefly pagination, CAPTCHA restrictions, JavaScript rendering, and the anti-scraping mechanisms many websites deploy. Working through them calls for a structured approach like the one outlined below.

Step 1: Choose A Suitable Directory

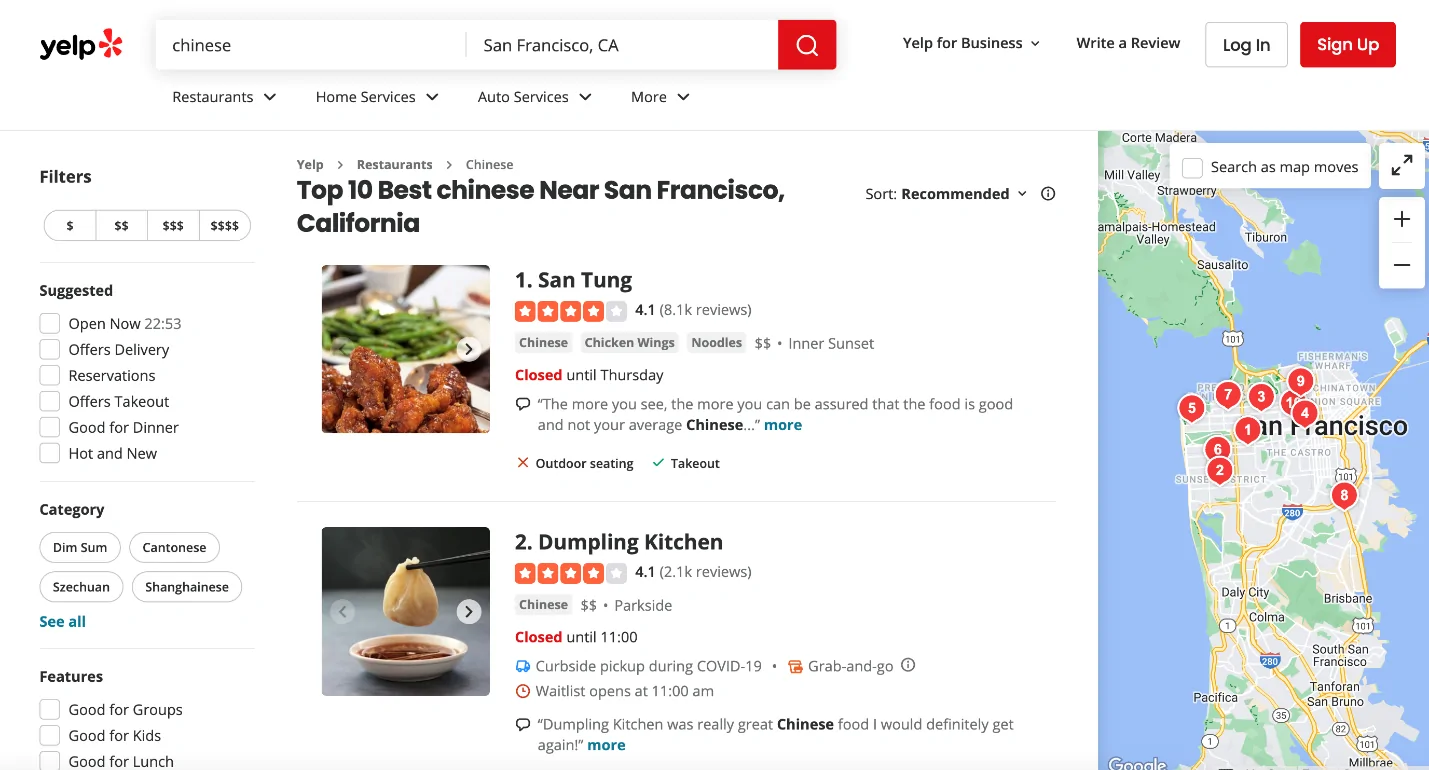

The first step in extracting online directory listings is picking a suitable directory. That means identifying a website that holds the data you need, such as business names, contact details, addresses, categories, or reviews. Candidates include platforms such as Yellow Pages, Yelp, and LinkedIn, as well as industry-specific directories for real estate, healthcare, e-commerce, and so on.

Once you have selected a target directory, examine how its content is structured. Check whether the information is freely accessible or requires authentication. Some directories restrict access to logged-in users, while others enforce CAPTCHA or bot detection. If the website's robots.txt file disallows scraping, look for an official API or request permission from the site owner.
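
The robots.txt check described above can be automated with Python's standard `urllib.robotparser` module. The following is a minimal sketch, not a definitive implementation: the helper name `is_allowed`, the `my-scraper` user agent, and the sample robots.txt content are all assumptions made for illustration. A real check would first download the file from the target site.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content used only for this example.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://example-directory.com/businesses"))
print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://example-directory.com/private/data"))
```

With this sample file, the first URL is permitted and the second is not, because only paths under `/private/` are disallowed.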

After that, look at the type of information available. Is the data arranged in a way that permits efficient extraction? Look for clear data points such as business names, phone numbers, addresses, and site links. Check whether the information is part of the static HTML or is loaded dynamically with JavaScript, which requires tools such as Selenium or Puppeteer.

Choosing the target carefully keeps the scraping process smooth and reduces problems later on.

Step 2: Analyze The HTML Elements

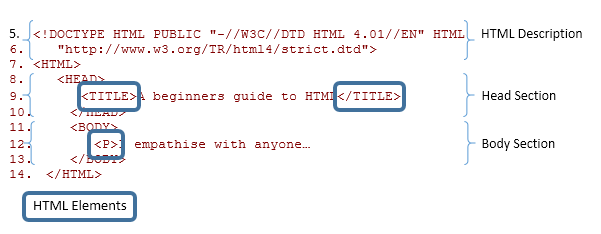

Before writing any code, examine how the directory presents its information. This helps you identify the specific HTML elements that contain the data you want.

Open the target site in Google Chrome, Firefox, or Edge and launch Developer Tools by pressing F12 or by right-clicking and choosing Inspect. Go to a sample listing and find the relevant elements, such as:

A business title, usually inside `<h1>` or `<h2>` tags.

Address and contact details, typically within `<p>` or `<span>` tags with specific classes.

Links and categories, generally inside `<a>` tags or `<div>` containers.

Check whether the content loads dynamically through JavaScript. If the information is missing from the initial page source but shows up after scrolling or clicking, the website uses AJAX or JavaScript rendering. In that case, libraries such as Selenium, Puppeteer, or Playwright may be required instead of plain HTTP requests. Also note how pagination works, for example whether further listings load through a Next Page link.
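
One quick way to test for JavaScript rendering is to copy a business name you can see in the browser and check whether it occurs in the raw HTML the server returns. The sketch below assumes a hypothetical helper, `appears_in_static_html`, and two made-up HTML strings. In practice the raw HTML would come from a plain HTTP request to the page.

```python
def appears_in_static_html(raw_html: str, sample_text: str) -> bool:
    """Return True if sample_text occurs in the server-delivered HTML (case-insensitive)."""
    return sample_text.casefold() in raw_html.casefold()


# Made-up page sources for illustration only.
static_page = '<div class="business-listing"><h2>Acme Plumbing</h2></div>'
dynamic_page = '<div id="app"></div><script src="/bundle.js"></script>'

print(appears_in_static_html(static_page, "Acme Plumbing"))
print(appears_in_static_html(dynamic_page, "Acme Plumbing"))
```

If the text is missing from the raw HTML but visible in the browser, the listing is most likely injected by JavaScript and a browser automation tool is the safer choice.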

Step 3: Select An Efficient Tool

Two factors matter most when choosing a scraping tool:

Static and Dynamic Content:

If the directory serves its data as plain HTML, lightweight tools such as BeautifulSoup or Cheerio can parse and extract it efficiently.

If the website uses JavaScript to load content dynamically through AJAX requests, tools such as Selenium, Puppeteer, or Playwright are a better fit because they can interact with the page and render JavaScript.

Data Volume and Scraping Speed:

For small-scale extractions, BeautifulSoup with requests is effective and simple to use.

For large datasets that need high-speed extraction, Scrapy offers a robust, scalable framework with built-in request scheduling, HTTP caching, and proxy support.

If you need to interact with forms, logins, or CAPTCHA prompts, Selenium or Puppeteer can automate the browser actions.

Picking the right tool makes extraction efficient while handling site-related challenges such as JavaScript rendering, pagination, and anti-bot protections.
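
The selection rules above can be condensed into a small decision helper. This is only a rough sketch of the guidance in this step: the function `suggest_tool` and its three flags are hypothetical names, and real projects often combine tools.

```python
def suggest_tool(dynamic: bool, large_scale: bool, needs_interaction: bool) -> str:
    """Map the criteria from this step to a suggested tool family."""
    if dynamic or needs_interaction:
        # JavaScript rendering, forms, and logins need a real browser.
        return "Selenium, Puppeteer, or Playwright"
    if large_scale:
        # Static pages at high volume suit a crawling framework.
        return "Scrapy"
    # Small, static jobs need only an HTTP client and an HTML parser.
    return "requests + BeautifulSoup"


print(suggest_tool(dynamic=False, large_scale=False, needs_interaction=False))
print(suggest_tool(dynamic=False, large_scale=True, needs_interaction=False))
print(suggest_tool(dynamic=True, large_scale=False, needs_interaction=False))
```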

Step 4: Compose A Script

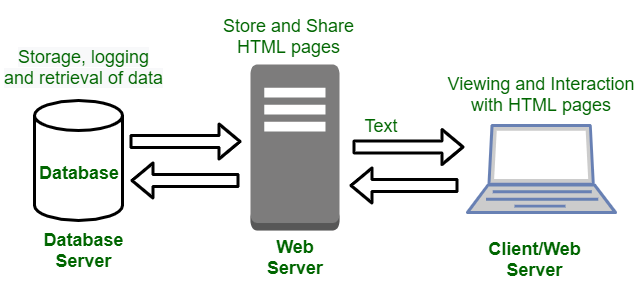

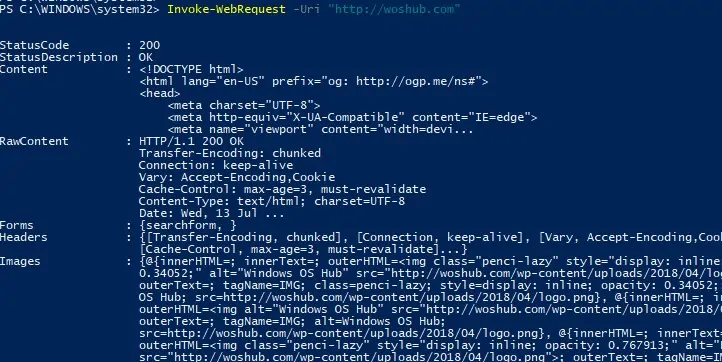

Once you have chosen a tool, the next step is to write a script that requests the web pages, parses the HTML, and extracts the desired information. The approach depends on whether the website loads its data statically or dynamically.

For static sites, use Python's requests library to fetch the page source, as in the following example:

    import requests
    from bs4 import BeautifulSoup

    url = "https://example-directory.com/businesses"
    headers = {"User-Agent": "Mozilla/5.0"}
    response = requests.get(url, headers=headers)
    soup = BeautifulSoup(response.text, "html.parser")

For JavaScript-heavy sites, Selenium or Puppeteer can render the page in a real browser before you read its HTML. With Selenium, that looks like this:

    from selenium import webdriver

    driver = webdriver.Chrome()
    driver.get("https://example-directory.com/businesses")
    html = driver.page_source

Use the tags and classes you identified in Developer Tools to extract the content, for example:

    for listing in soup.find_all("div", class_="business-listing"):
        name = listing.find("h2").text.strip()
        address = listing.find("p", class_="address").text.strip()
        print(name, address)

A well-written script makes extraction efficient and lowers the chance of being blocked.
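
Real listings often lack a field, and a missing tag would make a chained `.find(...).text` call raise an error. As a dependency-free alternative to the BeautifulSoup loop (a swapped-in technique, not the approach used elsewhere in this post), the sketch below uses Python's standard `html.parser` to collect each listing into a dictionary and leave absent fields empty. The class name `ListingParser` and the sample HTML are assumptions. The `business-listing` and `address` class names follow the example above.

```python
from html.parser import HTMLParser


class ListingParser(HTMLParser):
    """Collect name and address from <div class="business-listing"> blocks."""

    def __init__(self):
        super().__init__()
        self.records = []      # finished listings, one dict each
        self._current = None   # listing being built, or None outside a listing
        self._depth = 0        # <div> nesting depth inside the current listing
        self._field = None     # field currently capturing text, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div":
            if self._current is not None:
                self._depth += 1
            elif "business-listing" in classes:
                self._current = {"name": "", "address": ""}
                self._depth = 1
        elif self._current is not None:
            if tag == "h2":
                self._field = "name"
            elif tag == "p" and "address" in classes:
                self._field = "address"

    def handle_data(self, data):
        if self._current is not None and self._field:
            self._current[self._field] += data

    def handle_endtag(self, tag):
        if self._current is None:
            return
        if tag in ("h2", "p"):
            self._field = None
        elif tag == "div":
            self._depth -= 1
            if self._depth == 0:
                # The listing's outer <div> closed: store a cleaned copy.
                self.records.append({k: v.strip() for k, v in self._current.items()})
                self._current = None


# Made-up HTML: the second listing has no address.
sample_html = (
    '<div class="business-listing"><h2> Acme Plumbing </h2>'
    '<p class="address">1 Main St</p></div>'
    '<div class="business-listing"><h2>Beta Bakery</h2></div>'
)

parser = ListingParser()
parser.feed(sample_html)
print(parser.records)
```

Collecting records into a list of dictionaries, rather than printing them, keeps the extraction step separate from whatever storage format is chosen later.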

Step 5: Address Pagination And Anti-Scraping Mechanisms

Many online directories spread their listings over multiple pages. To scrape everything, you need to handle both pagination and anti-scraping mechanisms.

Directories commonly paginate with Next Page buttons, where the URL changes to page=2, page=3, and so on.

Another mechanism is infinite scrolling, which loads more data dynamically through AJAX as the user scrolls.

For paginated URLs, loop through the page numbers:

    base_url = "https://example-directory.com/businesses?page="

    for page in range(1, 6):  # Adjust range based on the total pages
        url = base_url + str(page)
        response = requests.get(url, headers=headers)
        soup = BeautifulSoup(response.text, "html.parser")
        # Extract data here…
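
When the total number of pages is not known in advance, one common pattern is to keep requesting pages until one comes back empty. The sketch below illustrates that idea under stated assumptions: `scrape_all_pages` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a function that would request and parse one real page.

```python
from typing import Callable, Iterator, List


def scrape_all_pages(
    fetch_listings: Callable[[int], List[dict]], max_pages: int = 100
) -> Iterator[dict]:
    """Yield listings page by page, stopping at the first empty page."""
    for page in range(1, max_pages + 1):  # max_pages guards against endless loops
        listings = fetch_listings(page)
        if not listings:
            break
        yield from listings


# Stand-in data source for illustration; pages 1 and 2 exist, page 3 is empty.
FAKE_PAGES = {1: [{"name": "Acme Plumbing"}], 2: [{"name": "Beta Bakery"}]}


def fake_fetch(page: int) -> List[dict]:
    return FAKE_PAGES.get(page, [])


all_listings = list(scrape_all_pages(fake_fetch))
print(len(all_listings))
```

Passing the fetch function in as a parameter keeps the stopping logic independent of how each page is downloaded, so the same loop works with requests or Selenium.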

For infinite scrolling, Selenium can simulate the scrolling, as in the following example:

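    import time
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys

    driver.get("https://example-directory.com/businesses")
    body = driver.find_element(By.TAG_NAME, "body")  # Locate the page body by tag name
    for _ in range(5):  # Scroll multiple times
        body.send_keys(Keys.PAGE_DOWN)
        time.sleep(1)  # Give newly loaded listings time to appear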

To cope with anti-bot measures, apply the following strategies:

Send a realistic User-Agent header and rotate it, along with other headers, between requests. The line below sets a single value; rotating means choosing a different one for each request:

    headers = {"User-Agent": "Mozilla/5.0"}

Introduce delays between requests so that traffic does not arrive in bursts:

    import time

    time.sleep(2)  # Pause between requests

If required, you can also use proxy servers and CAPTCHA solvers. Combined with careful pagination handling, these measures improve the chances of a complete extraction without being blocked.
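
The header rotation and request delays described in this step can be sketched as two small helpers. This is an illustration under assumptions rather than a recommended configuration: the names `random_headers` and `polite_pause` are hypothetical, the User-Agent strings are shortened placeholders, and the `sleep` parameter exists only so the delay can be swapped out when testing.

```python
import random
import time

# Placeholder User-Agent strings; real ones would be full browser identifiers.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]


def random_headers() -> dict:
    """Return request headers with a randomly chosen User-Agent."""
    return {"User-Agent": random.choice(USER_AGENTS)}


def polite_pause(base: float = 2.0, jitter: float = 1.0, sleep=time.sleep) -> float:
    """Sleep for base seconds plus a random extra of up to jitter seconds."""
    delay = base + random.uniform(0, jitter)
    sleep(delay)
    return delay


print(random_headers())
```

In a scraping loop, `random_headers()` would be passed as the `headers` argument of each request and `polite_pause()` called between requests. The random jitter makes the timing less regular than a fixed `time.sleep(2)`.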

Step 6: Store The Data

Once you have extracted the directory listings, store the data in an organized format for easy access and analysis. The most common choices are CSV, JSON, or a database such as MySQL or MongoDB.

CSV is well suited to tabular data that will be opened in Excel or Google Sheets. The snippets in this step assume the scraped records are held in a list of dictionaries named extracted_data. To save the data as a CSV file:

    import csv

    data = [("Business Name", "Address", "Phone")]  # Header row

    for listing in extracted_data:
        data.append((listing["name"], listing["address"], listing["phone"]))

    with open("directory_listings.csv", "w", newline="", encoding="utf-8") as file:
        writer = csv.writer(file)
        writer.writerows(data)

JSON is useful for organizing and storing nested or hierarchical data. To save the data in this format:

    import json

    with open("directory_listings.json", "w", encoding="utf-8") as file:
        json.dump(extracted_data, file, indent=4)

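Another option is to insert the data into a database, which is the most suitable choice for large-scale scraping. Databases such as MySQL or MongoDB make the information simple to query and retrieve. The following example uses SQLite, which ships with Python, to show the pattern; a similar create, insert, and commit sequence applies to other SQL databases through their own drivers: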

    import sqlite3

    conn = sqlite3.connect("listings.db")
    cursor = conn.cursor()
    cursor.execute("CREATE TABLE IF NOT EXISTS businesses (name TEXT, address TEXT, phone TEXT)")

    for listing in extracted_data:
        cursor.execute("INSERT INTO businesses VALUES (?, ?, ?)", (listing["name"], listing["address"], listing["phone"]))

    conn.commit()
    conn.close()
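
To illustrate the query and retrieval side, the following sketch loads a few records into an in-memory SQLite database and reads some of them back. The sample records are invented for the example, and `executemany` with named placeholders is used here as a compact alternative to the row-by-row loop above.

```python
import sqlite3

# Invented sample records standing in for real scraped results.
extracted_data = [
    {"name": "Acme Plumbing", "address": "1 Main St", "phone": "555-0100"},
    {"name": "Beta Bakery", "address": "2 Oak Ave", "phone": "555-0101"},
]

conn = sqlite3.connect(":memory:")  # In-memory database; nothing is written to disk
conn.execute("CREATE TABLE IF NOT EXISTS businesses (name TEXT, address TEXT, phone TEXT)")

# Named placeholders (:name, ...) are filled from each dictionary's keys.
conn.executemany(
    "INSERT INTO businesses VALUES (:name, :address, :phone)", extracted_data
)
conn.commit()

# Retrieve only the businesses whose address contains "Main".
rows = conn.execute(
    "SELECT name, phone FROM businesses WHERE address LIKE ? ORDER BY name",
    ("%Main%",),
).fetchall()
print(rows)

conn.close()
```

Parameterized queries, as used in both the insert and the select, keep scraped text from being interpreted as SQL.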

Conclusion

Scraping online directory listings is a productive method that corporations and data analysts frequently use to obtain the information they need for making profitable decisions. Some of the best free sources of sales leads are online company directories such as MLS listings, Google Maps, LinkedIn, association websites, and Yellow Pages directories. Manually copying and pasting data from these websites, however, takes a lot of time and work. The steps described in this post enable organizations and individuals to scrape worthwhile data from online directory listings efficiently.