How Do Search Engines Crawl & Index Data?

This blog is a treat for anyone who wants to learn how search engines crawl websites and index web pages. It discusses several popular methods that help search engines find new and updated content as quickly as possible. Optimizing a website before learning how search engines operate is akin to publishing your first novel without knowing the prerequisites of writing!

The crawling and indexing process is easier to follow once you know the core elements, so let us begin by understanding how search engines work in order to optimize for them to our business's benefit. Neither Bing, Google, nor any other major search engine is in the business of providing organic listings. Organic results are a means to an end and do not generate revenue for them directly. Put simply, Bing and Google (and the other popular search engines) are advertising engines that draw users to their properties with organic listings. You may be wondering why this even matters. It is the reason Google adds a fourth paid search result to commercial queries, and the reason Google offers featured snippets so that you do not have to leave Google.com to find the answer to your query. Both, in turn, affect the click-through rate (CTR) of organic results.

How Search Engines Work Today: The Series

Let us dive into the more crucial question of why Google provides organic results at all. The nuts and bolts of how the search engine operates include:

- Machine Learning (ML)

- Crawling and Indexing

- Algorithms

- User Intent

Indexing

The story begins with indexing, the process by which a search engine adds the content of web pages to its index. When you create a new web page on your business website, there are several ways it can be indexed. High-profile search engines like Google have built-in crawlers that follow links, so provided your website is already indexed and the new content is linked from within it, Google will discover the page on its own and add it to its index. If you want Googlebot to reach your web page faster, for example because the content is timely or you have made an important change, you must let Google know about it.
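The link-following discovery described above can be sketched in a few lines of Python. This is only an illustration, not how Googlebot is actually implemented: the `discover_pages` function, the `fetch` callable, and the `example.com` URLs are all made up for the example, and a small in-memory dictionary stands in for real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def discover_pages(start_url, fetch):
    """Follow links breadth-first, starting from a page that is already known.

    `fetch` is a hypothetical callable that returns the HTML for a URL,
    or None when the page cannot be retrieved.
    """
    seen = {start_url}
    queue = deque([start_url])
    order = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        order.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return order


# A tiny made-up website: the new post is reachable only through the blog page.
SITE = {
    "https://example.com/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "https://example.com/blog": '<a href="/blog/new-post">New post</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/new-post": "<p>Fresh content</p>",
}

discovered = discover_pages("https://example.com/", SITE.get)
print(discovered)
```

The new post is found only because an already-known page links to it, which is why internal linking matters for discovery.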

Boost Your Content with Keyword Intent Analysis

Semrush’s Keyword Intent Metric has made it much easier to align keywords with the right content and the right audience. There are several reasons you may need a critical page indexed faster, especially when you have adjusted its title or description to improve click-throughs. When the updated page is indexed promptly, you can tell when the change is picked up and displayed in the SERPs, and therefore at what point the measurement of improvement begins. In such cases, there are other proven methods you can use, such as:

1. XML Sitemaps

An XML sitemap is a file you submit to a search engine, for example to Google through Search Console. It gives the search engine a list of all the web pages on your website, along with extra details such as when each page was last modified. The method is certainly recommended, but remember that it is not particularly reliable when you need a page indexed right away.
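As a rough illustration of what such a file contains, the sketch below builds a minimal sitemap with Python's standard library. The `build_sitemap` helper, the URLs, and the dates are invented for the example; the `urlset`, `url`, `loc`, and `lastmod` elements come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # date as YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Made-up pages and modification dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/new-post", "2024-02-01"),
])
print(sitemap_xml)
```

The resulting text would typically be saved as `sitemap.xml` on the site and then submitted through Search Console.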

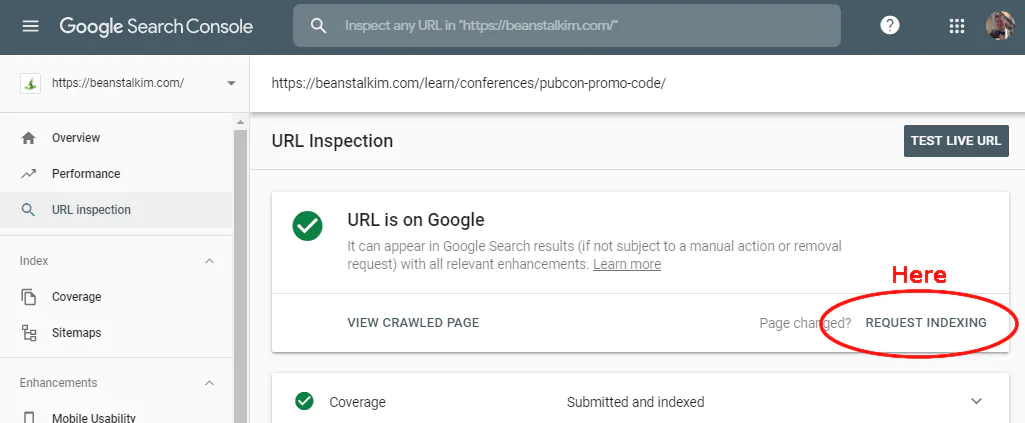

2. Request Indexing

You can “Request Indexing” in Search Console as well. Begin by clicking the search field at the very top, which by default reads “Inspect any URL in domain.com”. Enter the URL of the page you want indexed and press “Enter”. If the web page is already known to Google, you will be presented with the information available on it. The important part here is whether the page is indexed or not. The method works well both for getting good content discovered and for asking Google to register recent changes made to your web pages. For convenience, see the red-circled section in the reference image attached below.

Within a short time, you will be able to search for the new content or the URL in Google. You can also check whether the new content or change has been picked up.

3. Host Your Content on Google

Crawling websites in order to index them is a painstaking process that consumes both time and resources. One alternative is to host your web page content directly with Google. Or you can give Google direct access to the content via APIs, XML feeds, etc., decoupling the information from your page design.

Firebase, Google’s mobile app platform, gives Google direct access to app content, bypassing any need for an algorithm to figure out how to capture the information. Enabling search engines to index information immediately, with little effort on your part, is a better option today and a likely direction for the future.

The properly indexed content can then be made available in the desired format, based on the accessing technology.

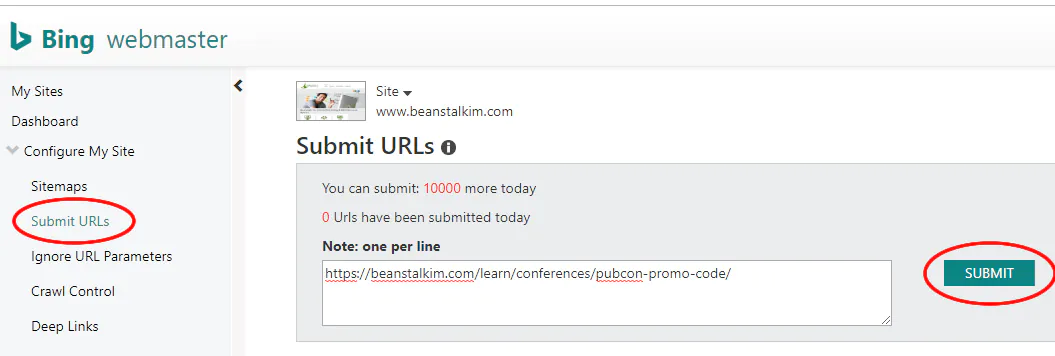

4. And Bing, Too!

To get your web page content indexed and updated quickly on Bing, you will need a Bing Webmaster Tools account. If you don’t have one yet, you can register right away. The information it provides will help you assess your weaknesses and improve your website’s rankings on Google, Bing, or any other search engine, and will likely lead to a better user experience as well. To get your content indexed, click: My Site > Submit URL(s)

Here, enter the URL(s) that you want indexed, then click “Submit” to get started.
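If you have many URLs, the same submission can be scripted. The sketch below only builds the HTTP request and does not send it. It assumes Bing's URL Submission API as we understand it from Bing's documentation (the `SubmitUrlbatch` endpoint, an `apikey` query parameter, and a JSON body with `siteUrl` and `urlList`), so verify those details in your Bing Webmaster Tools account before relying on them. The `build_submission` helper, the API key, and the URLs are placeholders.

```python
import json
from urllib.request import Request

# Assumed endpoint of Bing's URL Submission API; confirm it in Bing's docs.
BING_SUBMIT_ENDPOINT = "https://ssl.bing.com/webmaster/api.svc/json/SubmitUrlbatch"


def build_submission(site_url, urls, api_key):
    """Build (but do not send) a POST request submitting URLs for indexing."""
    body = json.dumps({"siteUrl": site_url, "urlList": list(urls)}).encode("utf-8")
    return Request(
        f"{BING_SUBMIT_ENDPOINT}?apikey={api_key}",
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Placeholder values; the API key would come from your Bing Webmaster Tools account.
request = build_submission(
    "https://example.com",
    ["https://example.com/blog/new-post"],
    api_key="YOUR_API_KEY",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# To actually submit, pass the request to urllib.request.urlopen(request).
```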

That’s pretty much everything you need to get started with indexing using search engines. However, there is always an option to reach out to a professional to get things straightened out for you and save your precious time.

How Can ITS Help You With Data Abstraction Services?

Information Transformation Service (ITS) provides excellent Data Abstraction Services and other related options for a better data experience. We consider our customers’ ease and satisfaction our topmost priority. Minimizing workload and clearing errors out of large databases helps your projects run at their full potential every day. Our professional Data Abstraction Service is all about condensing large, incomprehensible chunks of data into concise segments that fit into minimal space. The ITS Team does not compromise on its standard for information quality while adhering to size metrics and presenting the maximum amount of relevant information within the minimum space required.

With 30 years of experience providing satisfactory service, you can count on us for all your big data projects. If you are interested in the ITS Data Abstraction Service, you can ask for a free quote!