Challenges of Scraping an eCommerce Website

These days, web scraping is ubiquitous among eCommerce businesses because of the benefits data provides for formulating effective business policy and staying ahead of competing eCommerce companies. Web scraping services can solve your data extraction problems and help attract clients to your new projects. Nearly everyone is now connected to the internet, and online social activity is part of daily life, so a digital, personalized platform can serve your requirements when used in the right way. By the end of this blog, you should be able to see web scraping as an essential tool for giving your enterprise the new life and energy it lacked.

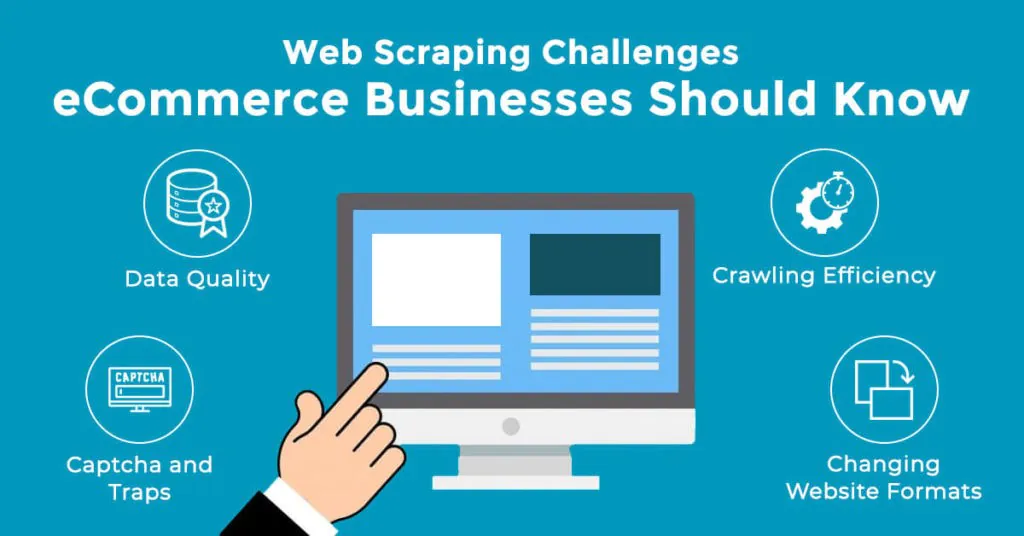

Web data is increasingly used in day-to-day business operations across the globe. It fuels competitor analysis and research into price shifts and new product stock. For eCommerce websites, certain aspects are essential to consider, such as the reliability and validity of the data and the frequency at which the data is needed for an ongoing task. In this blog, we focus on the challenges faced during web scraping and on how the best web scrapers overcome these obstacles to deliver distortion-free data.

Challenge #1 – Number of Requests Made

Scraping at scale can mean millions of requests made to eCommerce websites each day. To handle such volumes, companies need thousands of IPs in their proxy pool. The pool should cover different locations and proxy types, both residential and data center, so that the required information can be scraped when it is needed. With such a pool, scraping data in large quantities becomes much easier. However, managing so many proxies can be a daunting task. The complexity can be kept under control by adding an intelligence layer to your proxy logic. The more sophisticated your proxy management layer is, the more hassle-free your proxy pool becomes.
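
As a rough illustration of what a proxy pool with mixed proxy types might look like, here is a minimal sketch. The `Proxy` and `ProxyPool` names, the placeholder addresses, and the round-robin rotation are all assumptions made for this example, not a description of any particular product.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Proxy:
    url: str      # placeholder address, not a real proxy
    kind: str     # "residential" or "datacenter"
    country: str  # two-letter country code


class ProxyPool:
    """Hands out proxies in round-robin order so load is spread evenly."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._proxies = list(proxies)
        self._rotation = cycle(self._proxies)

    def next_proxy(self):
        # Each call returns the next proxy and wraps around at the end.
        return next(self._rotation)

    def of_kind(self, kind):
        # Filter the pool by proxy type, e.g. only residential proxies.
        return [p for p in self._proxies if p.kind == kind]


pool = ProxyPool([
    Proxy("http://10.0.0.1:8000", "datacenter", "US"),
    Proxy("http://10.0.0.2:8000", "residential", "US"),
    Proxy("http://10.0.0.3:8000", "residential", "DE"),
])
```

A real pool would hold thousands of entries loaded from a provider rather than three hard-coded ones, but the rotation idea stays the same.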

Challenge #2 – Building a robust intelligence layer

When scraping a website at a small scale, a simple proxy management infrastructure, well-designed web crawlers, and a large enough proxy pool will do the job. An intelligence layer adds protection for your scraping operation against outside interference. At a larger scale, the picture changes very quickly, with challenges emerging as listed below:

Ban Identification

Your proxy solution needs to detect the different types of bans, such as blocks, ghosting, redirects, and captchas, so that you can troubleshoot and resolve the underlying issues.
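
Ban detection could be sketched roughly as follows. The function name `classify_ban`, the status codes treated as blocks, the captcha marker strings, and the reading of "ghosting" as an empty successful response are all assumptions for illustration. Real websites signal bans in many different ways, so the rules would need tuning per site.

```python
from typing import Optional

# Assumed heuristics; adjust per target website.
CAPTCHA_MARKERS = ("captcha", "are you a robot")
BLOCK_STATUSES = {403, 429, 503}


def classify_ban(status: int, body: str,
                 requested_url: str, final_url: str) -> Optional[str]:
    """Return a label for the suspected ban type, or None if the response looks normal."""
    lowered = body.lower()
    if any(marker in lowered for marker in CAPTCHA_MARKERS):
        return "captcha"
    if status in BLOCK_STATUSES:
        return "block"
    if final_url != requested_url:
        # Not every redirect is a ban, so treat this only as a signal to inspect.
        return "redirect"
    if status == 200 and not body.strip():
        # Assumed meaning of "ghosting": a successful status with no real content.
        return "ghosting"
    return None
```

The returned label can then feed the retry logic, for example by retiring a proxy after repeated "block" results.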

Retry Errors

If a request through a proxy runs into an error, a timeout, or a ban, you need to retry the request with a different proxy each time.
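
One possible shape for this retry logic is sketched below. `FetchError`, `fetch_with_retries`, and `fake_fetch` are hypothetical names, and the real HTTP call is replaced by a stand-in function so the example runs without network access.

```python
import random


class FetchError(Exception):
    """Raised by the fetch callable on an error, timeout, or ban."""


def fetch_with_retries(url, proxies, fetch, max_attempts=3, rng=random):
    """Try `url` through up to `max_attempts` different proxies."""
    candidates = list(proxies)
    rng.shuffle(candidates)  # avoid always hitting the same proxy first
    last_error = None
    for proxy in candidates[:max_attempts]:
        try:
            return fetch(url, proxy)
        except FetchError as exc:
            last_error = exc  # this proxy failed, move on to the next one
    raise FetchError(f"all attempts failed for {url}") from last_error


def fake_fetch(url, proxy):
    # Stand-in for a real HTTP request: only proxy "p3" gets through.
    if proxy != "p3":
        raise FetchError(f"{proxy} was banned")
    return f"page from {url} via {proxy}"


page = fetch_with_retries("https://shop.example/item/1",
                          ["p1", "p2", "p3"], fake_fetch)
```

Because each attempt takes a different proxy from the shuffled list, a single banned IP does not cause the whole request to fail.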

Request Headers

Managing user agents, cookies, and other request headers is also crucial for a healthy web crawl.
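
A small sketch of header management might look like this. The `build_headers` helper and the sample user agent strings are assumptions for illustration; a production crawler would keep a larger, regularly updated list.

```python
import random

# Sample browser user agent strings, used here only as example values.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_headers(cookies=None, rng=random):
    """Build request headers with a randomly chosen user agent and optional cookies."""
    headers = {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept": "text/html,application/xhtml+xml",
        "Accept-Language": "en-US,en;q=0.9",
    }
    if cookies:
        # Serialize cookies as "name=value" pairs separated by "; ".
        headers["Cookie"] = "; ".join(f"{k}={v}" for k, v in cookies.items())
    return headers


headers = build_headers({"session": "abc", "cart": "1"})
```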

Control Proxies

Some scraping tasks require you to keep a session on the same proxy, so you need to configure your proxy pool to allow for this.
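
Keeping a session on one proxy could be handled with a simple mapping, as in this sketch. The `StickySessions` class and the session identifier are hypothetical names chosen for the example.

```python
import random


class StickySessions:
    """Pins each session to one proxy so all its requests share the same IP."""

    def __init__(self, proxies, rng=random):
        self._proxies = list(proxies)
        self._assigned = {}
        self._rng = rng

    def proxy_for(self, session_id):
        # Assign a proxy on first use, then keep returning the same one.
        if session_id not in self._assigned:
            self._assigned[session_id] = self._rng.choice(self._proxies)
        return self._assigned[session_id]

    def release(self, session_id):
        # Forget the assignment once the session is finished.
        self._assigned.pop(session_id, None)


sessions = StickySessions(["p1", "p2", "p3"])
pinned = sessions.proxy_for("checkout-42")
```

This matters for flows such as a multi-step checkout or paginated search, where a change of IP mid-session can invalidate cookies or trigger a ban.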

Add Delays

Randomizing the delays between requests and varying the request rate can have a serious impact on how successfully a website is scraped, because the traffic looks less automated. Most of these random delays should be applied automatically.
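
A randomized delay can be sketched in a few lines. The helper names `random_delay` and `polite_sleep`, and the default values of a 2 second base with 50% jitter, are assumptions for illustration only.

```python
import random
import time


def random_delay(base=2.0, jitter=0.5, rng=random):
    """Return a delay in seconds drawn uniformly from base*(1-jitter) to base*(1+jitter)."""
    return rng.uniform(base * (1 - jitter), base * (1 + jitter))


def polite_sleep(base=2.0, jitter=0.5, sleep=time.sleep):
    """Pause for a randomized interval before the next request and return its length."""
    delay = random_delay(base, jitter)
    sleep(delay)
    return delay
```

Calling `polite_sleep()` between requests gives pauses of 1 to 3 seconds with the defaults, so requests do not arrive at a fixed, easily detected rhythm.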

Geographical Targeting

Sometimes it is better to configure your pool so that only a subset of proxies, for example those in a particular region, is used for scraping selected websites.
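
Restricting certain websites to a subset of proxies might be configured as in the sketch below. The proxy list, the `SITE_COUNTRIES` mapping, the example hostnames, and the `proxies_for` helper are all hypothetical.

```python
from urllib.parse import urlparse

# Placeholder proxies tagged with the country they exit from.
PROXIES = [
    {"url": "http://10.0.0.1:8000", "country": "US"},
    {"url": "http://10.0.0.2:8000", "country": "DE"},
    {"url": "http://10.0.0.3:8000", "country": "US"},
]

# Assumed per-site rules: which countries may be used for which website.
SITE_COUNTRIES = {
    "shop.example": {"US"},
    "laden.example": {"DE"},
}


def proxies_for(url, proxies=PROXIES, site_countries=SITE_COUNTRIES):
    """Return the proxies allowed for the website that `url` belongs to."""
    host = urlparse(url).hostname
    allowed = site_countries.get(host)
    if allowed is None:
        return list(proxies)  # no restriction configured for this site
    return [p for p in proxies if p["country"] in allowed]


german_proxies = proxies_for("https://laden.example/p/1")
```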

Challenge #3 – Precision/Accessing the data you want

You might have noticed that some eCommerce websites display different prices and product details depending on the location of the user. To acquire accurate data, requests then have to be made from different locations or zip codes. As you can tell, it takes a lot of effort to uncover the true product information on such a website. The proxy pool must contain the right proxies to target those locations precisely. At a small scale, it is good enough to manually pick the relevant proxies for a specific web scraping need. However, the process becomes complex as the scale of the web scraping project grows. Therefore, an automated approach is key to scraping websites at a larger scale.

Challenge #4 – Reliability and data quality

The most important consideration when developing a proxy management solution for larger eCommerce scraping requirements is that it proves robust and reliable enough to supply high-quality data over the long term. Big eCommerce enterprises often extract business-critical information for competitive analysis of their products. Any disruption therefore raises serious issues with the validity, reliability, and usefulness of that data. The health of the data feed must be the main concern. Even a disruption of a few hours can have knock-on effects and leave a business with outdated product and pricing data for the following days.

Another recurring issue is requests that return inaccurate product data, which can be misleading. This is a real headache for data scientists, because there is always a chance of error in the data a company acquires. Once a seed of doubt has been planted, companies cannot make confident decisions to boost their product sales. Hence, robust proxy management with a built-in automated QA process goes a long way. It not only provides backend support but also resolves many minor troubleshooting problems in one go, so that you can use your data feed with confidence.
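
An automated QA step can start with very simple checks on each scraped record. The sketch below assumes a record with `name`, `price`, and `scraped_at` fields and a 24 hour freshness limit. The field names, the threshold, and the `validate_record` helper are illustrative assumptions, not a fixed schema.

```python
from datetime import datetime, timedelta, timezone


def validate_record(record, now=None, max_age=timedelta(hours=24)):
    """Return a list of problems found in one scraped product record."""
    now = now or datetime.now(timezone.utc)
    problems = []
    if not record.get("name"):
        problems.append("missing name")
    price = record.get("price")
    if not isinstance(price, (int, float)) or price <= 0:
        problems.append("invalid price")
    scraped_at = record.get("scraped_at")  # expected: timezone-aware datetime
    if scraped_at is None or now - scraped_at > max_age:
        problems.append("stale or missing timestamp")
    return problems


check_time = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
good = {"name": "Blue widget", "price": 9.99,
        "scraped_at": check_time - timedelta(hours=1)}
bad = {"name": "", "price": -1,
       "scraped_at": check_time - timedelta(days=3)}
```

Records that come back with a non-empty problem list can be re-queued for scraping instead of flowing into pricing decisions.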

The best solution for enterprise web scraping

The best solution for your eCommerce web scraping challenges is to manage proxies properly for each of your web scraping projects. By doing so, you can effectively overcome the difficulties described above. In practice, enterprise web scrapers have two distinct options when building their proxy infrastructure:

- Building the whole enterprise infrastructure in-house.

- Employing a single-endpoint proxy solution that deals with the arising challenges and technical complexities for you.

Build in-house

A robust in-house proxy management solution has to take care of IP rotation, session management, blacklisting logic, and request throttling at a high enough standard to prevent your spiders from getting blocked.
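
To give a feel for what an in-house manager involves, here is a minimal sketch that combines rotation, per-proxy throttling, and blacklisting. The `ProxyManager` class, its parameters, and the failure threshold are assumptions for illustration. A real system would also need session handling, persistence, and monitoring.

```python
import time


class ProxyManager:
    """Rotates proxies, spaces out reuse of each one, and retires failing ones."""

    def __init__(self, proxies, min_interval=1.0, max_failures=3,
                 clock=time.monotonic):
        self._proxies = list(proxies)
        self._min_interval = min_interval  # seconds between uses of one proxy
        self._max_failures = max_failures  # consecutive failures before blacklisting
        self._clock = clock
        self._failures = {p: 0 for p in self._proxies}
        self._last_used = {}
        self._next_index = 0

    def acquire(self):
        """Return the next usable proxy, or None if all are blacklisted or throttled."""
        now = self._clock()
        for offset in range(len(self._proxies)):
            index = (self._next_index + offset) % len(self._proxies)
            proxy = self._proxies[index]
            if self._failures[proxy] >= self._max_failures:
                continue  # blacklisted
            last = self._last_used.get(proxy)
            if last is not None and now - last < self._min_interval:
                continue  # used too recently, throttle
            self._last_used[proxy] = now
            self._next_index = (index + 1) % len(self._proxies)
            return proxy
        return None

    def report(self, proxy, ok):
        """Record the outcome of a request; a success resets the failure count."""
        if ok:
            self._failures[proxy] = 0
        else:
            self._failures[proxy] += 1
```

The `clock` parameter is injectable so the throttling behavior can be tested without waiting in real time.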

Single endpoint solution

The recommended approach is to go with a proxy provider that offers a single endpoint for proxy configuration. It helps in scaling data-intensive scraping without having to develop and maintain an internal proxy infrastructure for product data.

How Can ITS Help You With Web Scraping Services?

Information Transformation Service (ITS) offers a variety of professional web scraping services delivered by experienced staff and technical software. ITS is an ISO-certified company that addresses all of your big data and data reliability concerns. For the record, ITS has served millions of established and struggling businesses, helping them make their mark at an affordable price. We also customize special service packages that address your concerns and cover all your database requirements. At ITS, our customers are our most valued asset, and we reward them with a unique, state-of-the-art service package. If you are interested in ITS Web Scraping Services, you can ask for a free quote!