How To Scrape Dynamic Content With Scrapy-Splash

Various websites today rely on JavaScript for data rendering, which makes a potent webpage structure, making it challenging when it comes to scraping such content. Websites with dynamic content structures are unlike static pages, which present all essential data in the initial HTML. To scrape data from dynamic sites, tools like Scrapy-Splash are an ideal choice for smoothly extracting content from JavaScript-based arrangements. Scrapy-Splash is a combination of the Scrapy framework with Splash, which is a headless browser that is capable of rendering dynamic data with JavaScript. Employing a Scrapy-Splash integrated workflow, individuals can effectively scrape sites that rely on AJAX calls or user interactions for content loading. The central aspects of scraping dynamic content with Scrapy-Splash involve sending rendering requests to Splash and parsing the thoroughly rendered HTML for required data extraction. The Scrapy-Splash integration facilitates simple tasks like handling user agent rotation, cookies, and headers, as well as complicated chores like CAPTCHA solving and waiting for events to load on a page. This blog will further go on with the step-by-step process of scraping dynamic content with Scrapy-Splash.

Step 1: Setting Up Scrapy-Splash

To set up Scrapy-Splash, you need to prepare your Scrapy project to work with Splash, which is a headless browser to render JavaScript. Begin by installing the essential library. You can Utilize the command mentioned below:

pip install scrapy-splash

After that, update your Scrapy project’s settings.py file to facilitate the Splash middleware and assign Splash as a downloader handler. Include the configuration as mentioned below:

SPLASH_URL = ‘http://localhost:8050’

DOWNLOADER_MIDDLEWARES = {

‘scrapy_splash.SplashCookiesMiddleware’: 723,

‘scrapy_splash.SplashMiddleware’: 725,

‘scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware’: 810,

}

SPIDER_MIDDLEWARES = {

‘scrapy_splash.SplashDeduplicateArgsMiddleware’: 100,

}

DUPEFILTER_CLASS = ‘scrapy_splash.SplashAwareDupeFilter’

HTTPCACHE_STORAGE = ‘scrapy_splash.SplashAwareFSCacheStorage’

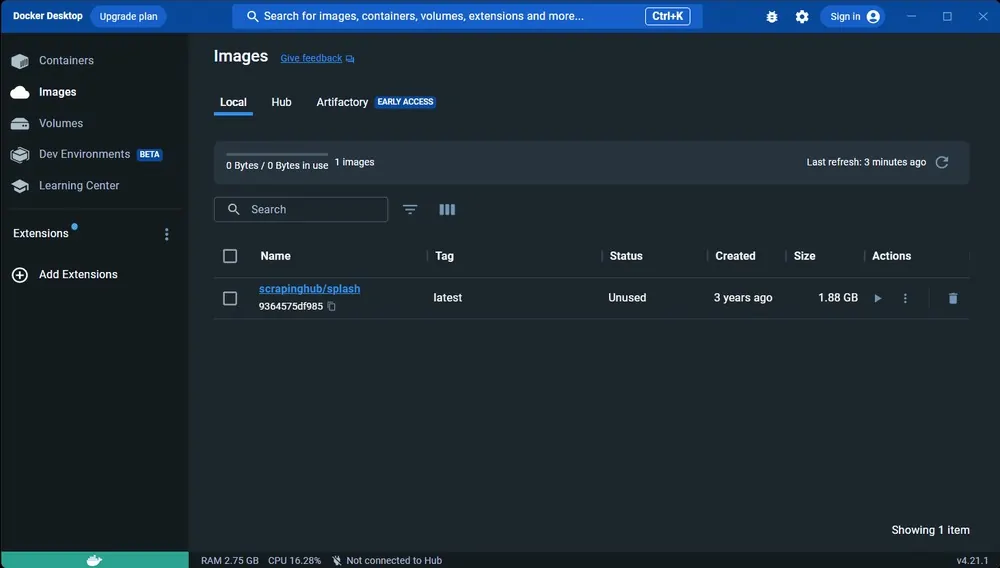

In the end, make sure you have installed Docker to execute the Splash instance. Drag and initiate the Splash Docker container using the command:

docker run -p 8050:8050 scrapinghub/splash

That step will prepare your project for dynamic content rendering and permit Scrapy to interact with the Splash server to deal with JavaScript-heavy pages.

Step 2: Installing And Configuring Splash

In the second step of scraping dynamic content with Scrapy-Splash, you have to install and configure Splash, which can render JavaScript-driven web pages. Splash operates as a server and can be installed utilizing Docker for simplicity and adaptability.

Begin by installing Docker on your device if you don’t have it already. After installing Docker, you need to pull the Splash Docker image by running the following command:

docker pull scrapinghub/splash

After that, initiate the Splash server utilizing the following code:

docker run -p 8050:8050 scrapinghub/splash

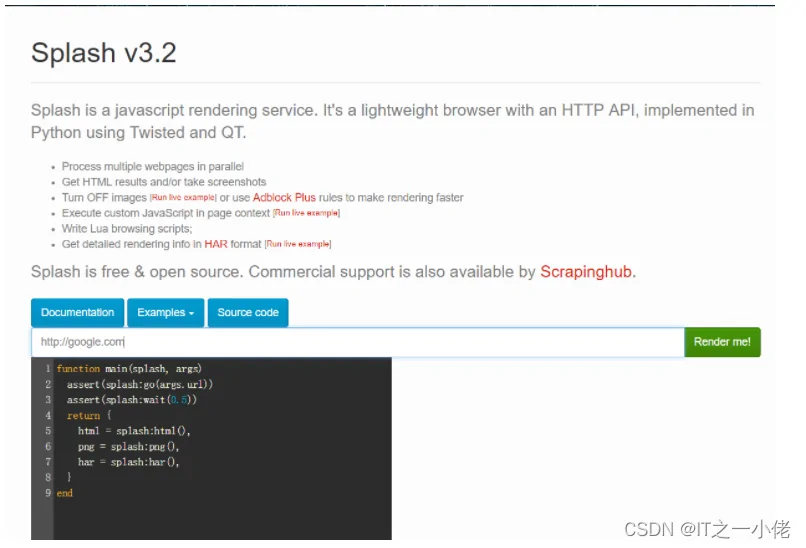

The above command will launch Splash on port 8050. You can confirm that Splash is operating by opening http://localhost:8050 in your browser. That ought to show the Splash web interface.

Next, you have to integrate Splash with Scrapy by adding its URL to the project’s settings.py file:

SPLASH_URL = ‘http://localhost:8050’

Once done with Splash installation and configuration, your Scrapy project can now send requests to this server for JavaScript rendering, empowering you to manage dynamic content proficiently.

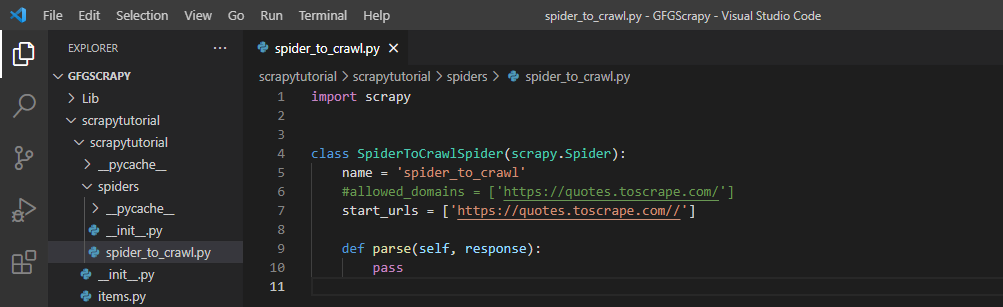

Step 3: Defining Splash Requests Within Your Scrapy Spider

Once you are done setting up Splash and Scrapy-Splash, the third step involves defining Splash requests within your Scrapy spider. Those requests are to be sent to the Splash server to render JavaScript on the target web page before extracting data.

To begin with, in your spider file, import SplashRequest from scrapy_splash using the following:

from scrapy_splash import SplashRequest

After that, inside your spider’s start_requests() or another method, you need to utilize SplashRequest to send a request to the Splash server. For instance, if you are scraping a webpage with dynamic content, you’ll send a request as follows:

def start_requests(self):

url = ‘http://example.com/dynamic-page’

yield SplashRequest(url, self.parse, args={‘wait’: 2})

In the above illustration, the args parameter indicates the arguments handed to the Splash server, like the time to wait (wait: 2) before the page rendering. It will guarantee that JavaScript has sufficient time to run.

You may also pass other arguments, such as lua_source, if you want custom JavaScript actions to communicate with the page before rendering, like clicking components or dealing with AJAX calls. The SplashRequest permits you to handle these dynamic behaviours as you scrape.

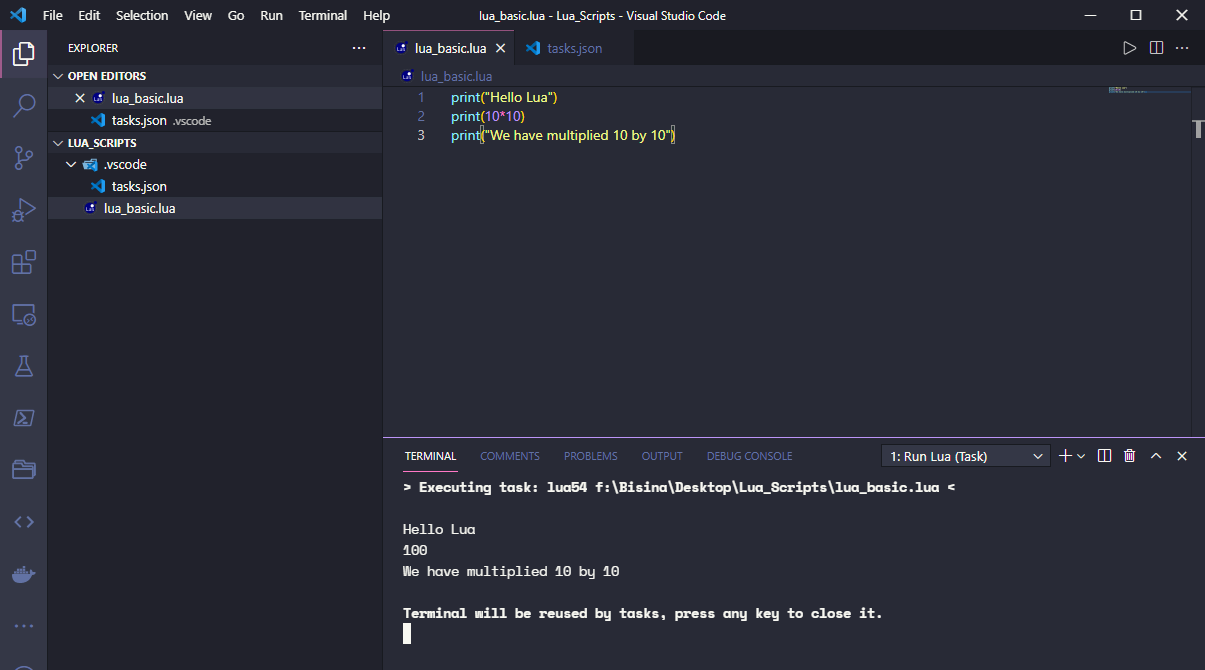

Step 4: Designing Lua Scripts For JavaScript Rendering

When you need to scrape highly dynamic pages with complex JavaScript activities, you may have to make custom behaviours for proper rendering of content before extracting. Scrapy-Splash permits you to connect with pages via Lua scripts, which are run by Splash before the content is rendered. These scripts authorize you to control activities like clicking buttons, waiting for elements to load, or connecting with AJAX calls.

The following is a simple illustration of a Lua script that holds up for an element to emerge on a page before rendering it:

function main(splash)

splash.private_mode_enabled = false

assert(splash:go(‘http://example.com/dynamic-page’))

assert(splash:wait(2))

local element = splash:select(‘div#content’) — Waits for content to load

assert(element)

return splash:html() — Returns the HTML of the page

end

In the above example, the script instructs Splash to visit a page, wait for 2 seconds, check for a specific HTML element (div#content), and, after that, return the thoroughly rendered HTML.

To utilize this Lua script with SplashRequest in your Scrapy spider, utilize the following:

def start_requests(self):

url = ‘http://example.com/dynamic-page’

lua_script = ”’

function main(splash)

splash.private_mode_enabled = false

assert(splash:go(‘http://example.com/dynamic-page’))

assert(splash:wait(2))

return splash:html()

end

”’

yield SplashRequest(url, self.parse, meta={‘splash’: {‘lua_source’: lua_script}})

That Lua script strategy is especially valuable once you require an adapted command over the JavaScript rendering process.

Step 5: Parsing The Rendered HTML Response

After Splash is done rendering the dynamic page and executing any vital JavaScript or interactions through Lua scripts, you will parse the rendered HTML response to extract the desired information. That step includes utilizing Scrapy’s effective selectors to extract data from the fully loaded page the same way you’d with a static page.

After sending the SplashRequest, you will define a parsing strategy In your Scrapy spider, where you can extract the significant content. The following is an example:

def parse(self, response):

# Extract title of the page

title = response.xpath(‘//h1/text()’).get()

# Extract product information or other dynamic content

products = response.xpath(‘//div[@class=”product-item”]’)

for product in products:

name = product.xpath(‘.//h2/a/text()’).get()

price = product.xpath(‘.//span[@class=”price”]/text()’).get()

yield {

‘name’: name,

‘price’: price

}

In that example, the parse strategy extracts the page’s title and iterates through each item on the rendered page, collecting particulars like name and price.

By utilizing Scrapy selectors such as .xpath() or .css(), you’ll be able to target the energetic content from the rendered HTML, including text, links, images, and other components that were loaded through JavaScript. If the page has complex JavaScript interactions, the hold-up argument in your SplashRequest or custom Lua scripts makes sure that the content is ready for scraping, avoiding missing or inadequate data extraction.

This phase permits you to instantly scrape and yield the information you need after the page has been completely rendered and is prepared for processing.

Step 6: Exporting The Scraped Data Into A Functional Format

After successful extraction of the dynamic content utilizing Scrapy-Splash, the final step involves exporting the scraped data into a functional format. Scrapy gives built-in mechanisms to export data into different formats, including JSON, CSV, and XML, making it simpler to store or process the scraped data further.

To export your data, alter your Scrapy spider to yield the data in your desired structure, as shown in the earlier step. The following is an example:

def parse(self, response):

title = response.xpath(‘//h1/text()’).get()

products = response.xpath(‘//div[@class=”product-item”]’)

for product in products:

name = product.xpath(‘.//h2/a/text()’).get()

price = product.xpath(‘.//span[@class=”price”]/text()’).get()

yield {

‘name’: name,

‘price’: price

}

Presently, you can run your spider and indicate the output format. Utilize the command as mentioned below to export the information to a JSON file:

scrapy crawl myspider -o output.json

The above command will tell Scrapy to run the spider (myspider) and save the extracted information into output.json. Moreover, you can also export it to CSV by utilizing:

scrapy crawl myspider -o output.csv

Scrapy permits numerous output formats at the same time. Furthermore, you can fine-tune your export strategy by utilizing pipelines to process or store your scraped information in databases. That flexibility makes it simple to incorporate the extracted information into different workflows or save it for future examination.

Conclusion

To conclude, the use of typical scraping techniques to handle dynamic websites with JavaScript-rendered content can be extremely tricky. Nevertheless, integrating Scrapy’s strong crawling capabilities with the JavaScript rendering power of the Splash headless browser makes Scrapy-Splash a viable choice. When working with dynamic content that is inaccessible in the original HTML response, this unification can help extract useful data effectively. Ultimately, you can successfully retrieve information from intricate web pages while ensuring that your scraping initiatives are reliable and efficient.