How To Scrape Data From RESTful APIs Using Python And Requests

Scraping data from RESTful APIs is an efficient approach to gathering structured data from web services. Different from formal scraping in which users need to extract data from HTML content, APIs facilitate immediate access to the raw data in a clean and predictable form. The integration of Python with request libraries presents a straightforward as well as useful way to interact with these APIs for data collection automation within a range of applications. The user cases range from simple data analysis to reporting to machine learning and automation. By using RESTful APIs, you can be free from addressing messy front-end code with frequently occurring layout modifications. As a substitute, you can send well-structured HTTP requests to particular endpoints and obtain definitive responses. Using the request library, both beginners and experts can smoothly communicate such communications with next to no code. This strategy can be highly effective while dealing with weather-predicting platforms, financial data services, social media APIs, and any other system that provides open and authenticated endpoints. This blog will further proceed with providing a step-by-step process of using Python and Requests to scrape data from RESTful APIs.

Step 1: Understanding The API

Before you get started with composing any code, you need to completely understand the API you will be working on. Begin by perusing the API documentation, which typically incorporates the base URL, available endpoints, mandated parameters, request strategies like GET or POST, rate limits, authentication strategies, and response formats. Understanding these attributes will help in avoiding mistakes and wasted time debugging later.

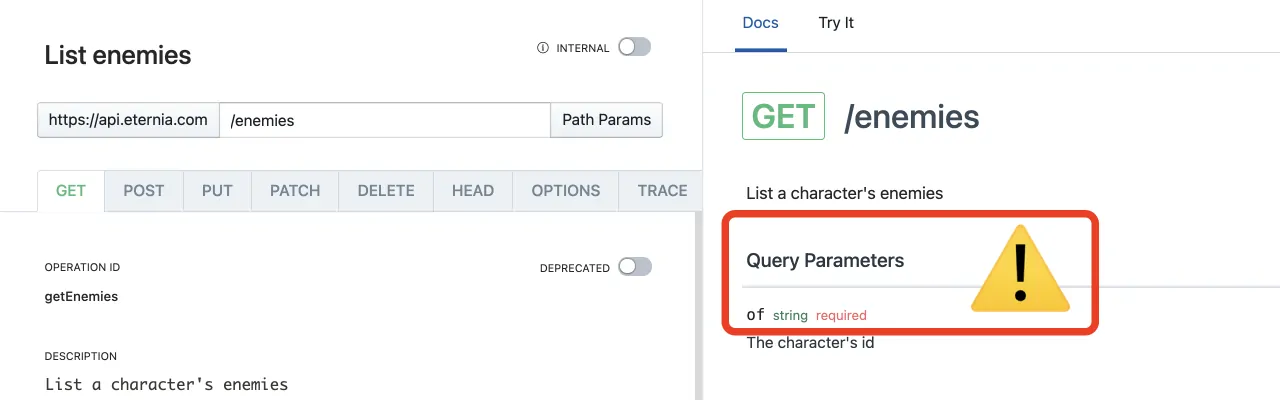

Concentrate on identifying the proper endpoint for the information you need. An endpoint could be a. specific URL that conforms to a resource or a set of resources. For instance, in a weather API, an endpoint could be. /v1/forecast to induce future climate information. Comprehend what query parameters are acknowledged, including area, date, or dialect. Moreover, check whether the API needs an API key or other forms of verification.

A few APIs return paginated information, suggesting you will only get a share of the dataset in a single call. For such possibilities, the documentation will explain how to access the following pages utilizing parameters such as page or offset.

Testing endpoints with tools such as Postman or cURL before composing code can be valuable. It will help to confirm that you are hitting the correct URL and that the response is exactly what you anticipate. That foundational step guarantees that all the following steps proceed efficiently.

Step 2: Defining The Parameters

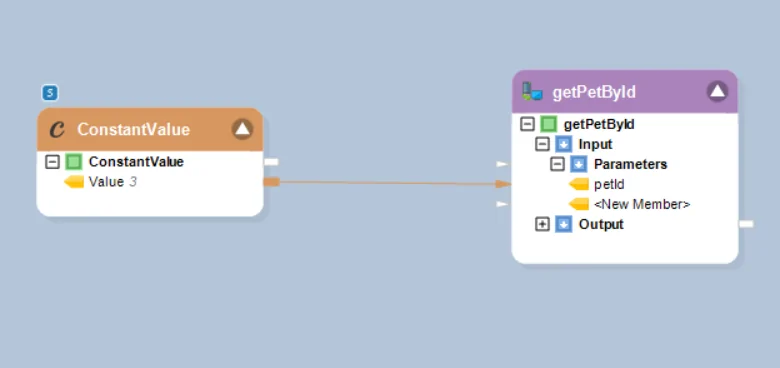

After you understand which endpoint to target, the second phase is to define the parameters required by the API to return the correct information. These parameters are generally sent as a portion of the query string in a GET request or within the body of a POST request. They can incorporate filters such as date ranges, particular IDs, location coordinates, pagination settings, sorting options, or any other criteria the API underpins.

Also, you need to prepare the request headers, which may include authentication tokens, including an API key or Bearer token, content types and user-agent information as required. Numerous APIs will not respond without the proper headers, particularly when authentication is included.

In Python, these parameters are usually passed utilizing dictionaries with the params argument for query strings or headers for request metadata when utilizing the requests library. Setting these up in an organized and reusable way makes your code clearer and simpler to adjust afterwards.

It is also a great practice to store sensitive values such as API keys in environment variables or config files, not specifically in your code, to keep them protected. Appropriately organizing your request setup prevents failed calls and enables you to maintain clean and scalable code.

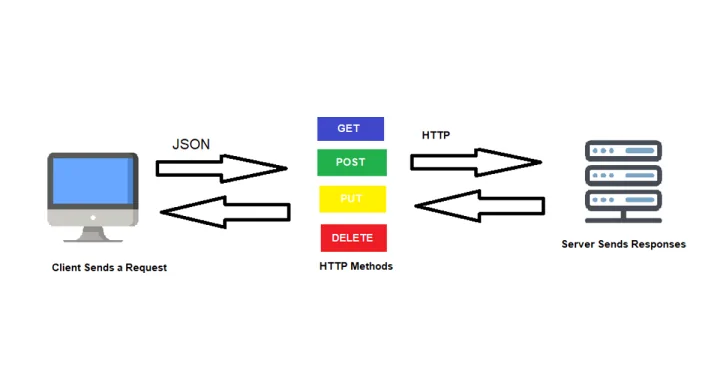

Step 3: Passing The HTTP Request

Once the endpoint and parameters are prepared, you can presently pass the genuine HTTP request to the API utilizing Python’s requests library. Most data scraping tasks include a basic GET request, though other strategies such as POST, PUT, or DELETE are utilized in different settings. Within a GET request, your parameters are sent within the URL, whereas in a POST request, they usually go within the request body.

The following is a fundamental case utilizing requests:

import requests

url = “https://api.example.com/data”

params = {“page”: 1, “limit”: 50}

headers = {“Authorization”: “Bearer YOUR_API_KEY”}

response = requests.get(url, params=params, headers=headers)

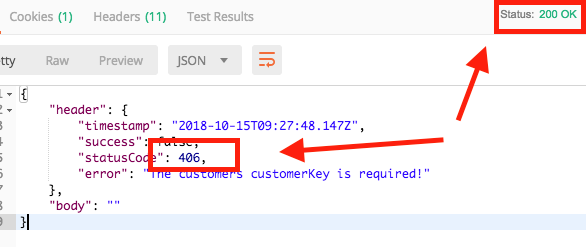

Continuously check the status_code of the response. A code of 200 implies success, whereas codes such as 400, 401, or 500 show distinctive sorts of errors like bad request, unauthorized, or server error. In case you are addressing unreliable endpoints or rate limits, look into including error handling or retry logic.

Sending the request effectively implies you are presently connected to the API and prepared to retrieve the data it provides. From here, you will move on to preparing the response effectively.

Step 4: Handling The Response

Once you have sent the request, you have to handle the response accurately to be sure the data you expect has really been returned. The primary check should continuously be the HTTP status code. A 200 status implies the request was effective. On the off chance that you get codes like 401 meaning unauthorized, 403 meaning forbidden, or 500 or server error, you need to investigate either the request format or your access rights.

Utilize the response.status_code to perform fundamental checks and response.raise_for_status() to throw an error automatically in the event that something goes wrong. It can make debugging simpler and keep your code clean.

Following, check the structure of the response. Most advanced APIs return data in JSON format, which you’ll access utilizing response.json(). However, a few might return XML or plain content. Be sure your code can handle the expected format.

Furthermore, observe for pagination indicators within the response—some APIs will not return all information right away and will incorporate a key like next_page, has_more, or a cursor value to get extra results.

Great response handling isn’t about accessing information, but also about building safeguards for unexpected behavior, empty results, or data limits imposed by the API provider.

Step 5: Extracting The Data From the Response

After you have affirmed a successful response, the fifth step is to extract the genuine data from it. Most Restful APIs yield data in JSON format, which is simple to process within Python. You will parse it directly utilizing the .json() strategy from the requests library, which converts the JSON response into a Python dictionary or list.

Here is an example:

data = response.json()

From here, assess the structure of the data object. That could be a list of things, a dictionary containing nested areas, or a blend of both. Utilize familiar Python operations to navigate and extract the regions you need—for instance, data[‘results’], data[0][‘name’], or loops to iterate over lists of records.

It is moreover imperative to regulate optional fields or nested values securely. Utilizing. .get() rather than direct key access (e.g., item.get(‘price’) instead of item[‘price’]) avoids errors when keys are lost.

On the off chance that the API returns paginated information, this phase might repeat over multiple responses—each parsed and appended to your data set.

Step 6: Data Organization And Storage

In case you are gathering data for investigation, visualization, or reporting, common storage alternatives include CSV files, JSON files, databases like SQLite and PostgreSQL, or even cloud storage platforms.

Here is an example of storing data in a CSV:

import csv

with open(‘output.csv’, ‘w’, newline=”) as file:

writer = csv.DictWriter(file, fieldnames=data[0].keys())

writer.writeheader()

writer.writerows(data)

Likewise, you can store data in a JSON file this way:

import json

with open(‘output.json’, ‘w’) as file:

json.dump(data, file, indent=2)

If you are into real-time data processing, you can employ custom logic, pandas or any other Python tools to handle the process deftly.

Conclusion

To sum up, the ability to retrieve data via APIs is essential for contemporary program development. You can design strong apps that use external data and services once you know how to perform HTTP requests, process replies, and adhere to best practices. Specifically, a RESTful API is a potent tool for effectively gathering, organizing, and sharing data. It makes it possible to retrieve structured data from web pages in a robust and adaptable manner that can be easily included in your workflow. You can eventually get clear, organized data forms and have a lower chance of being banned.